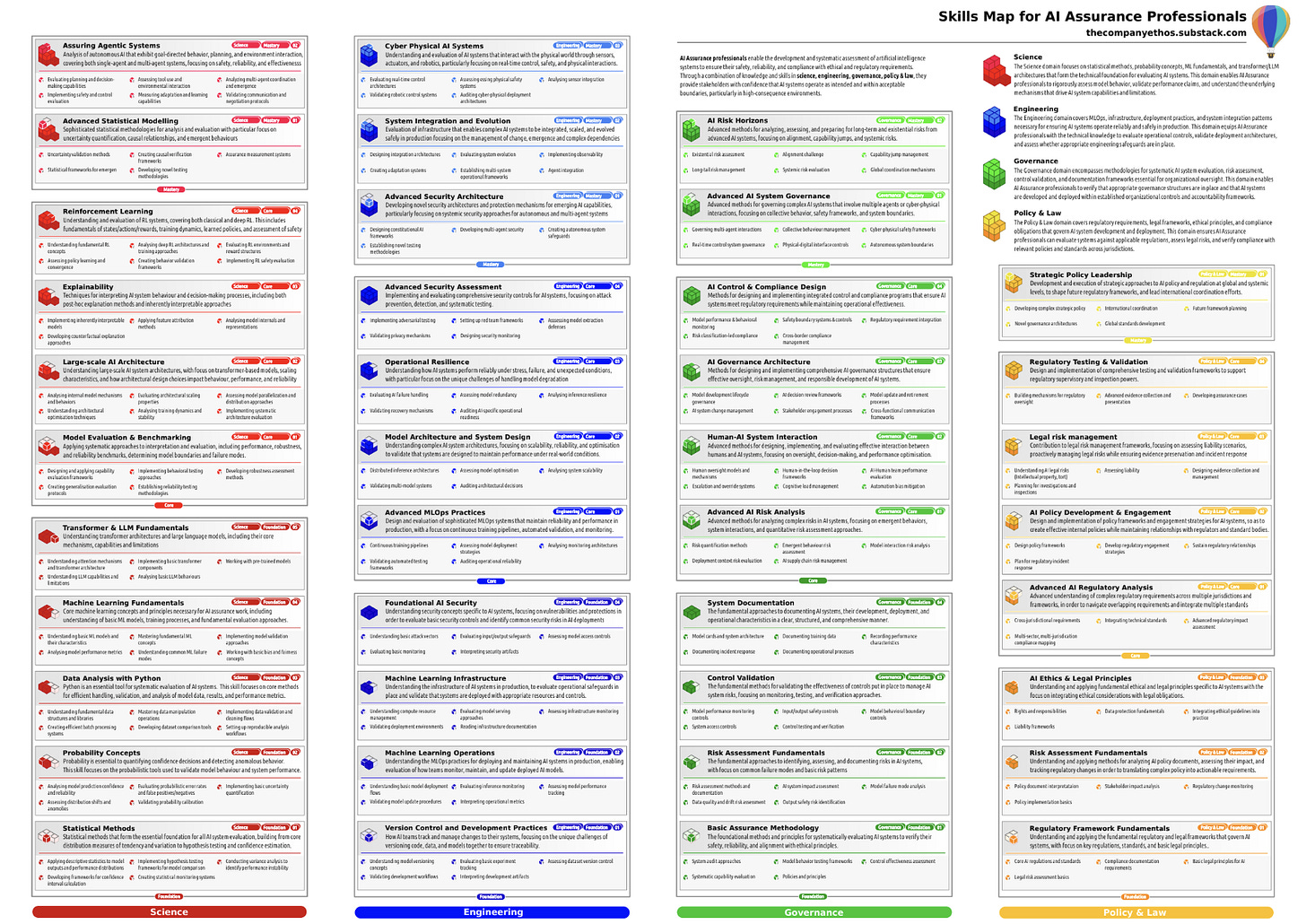

Your Map to the AI Assurance Profession

A roadmap of 200 skills from foundation to mastery across science, engineering, governance, policy and law

A year ago, I thought that my twenty years of experience in systems engineering and regulatory assurance had prepared me for anything in technology. Then I stepped into leading Responsible AI Assurance for Amazon Web Services and discovered a whole different world. I’ve thoroughly enjoyed the challenge of learning this rapidly changing space, and I wanted to share some lessons and insights if you’re on a similar learning journey.

So today, and over the next few articles, I’m going to walk through a map I’ve put together, one that I really wished I'd had when I started. It’s a guide to developing the skills you need for expertise in AI assurance, broken down into four domains and three levels of expertise, along with pointers to the best resources I know of for developing each skill. I've taken everything I've learned (and many topics I plan to learn) and turned it into what I hope will work for you as a framework for professional development.

You can download the full-size skills map poster and subscribe to future updates at the link below.

You know what I love about this field? It’s that there's no one "right" way to develop your expertise. Maybe you'll focus on the technical side, excelling at model evaluation and interpretability or working with engineering teams on securing AI systems against emerging threats. Or perhaps you'll specialise in risk frameworks, governance and policy. Whatever path you take, these resources are here to help as you choose your own adventure. But let me first walk through the overall structure of four major domains and 40 topics covering foundation, core and mastery-level skills.

Science

First up is Science. You can't evaluate AI systems effectively without understanding the science of machine learning, including how models are designed, built and tested. You won't need to invent new algorithms, but you will need a working understanding of statistics, algorithms, machine learning architectures and experimental design. You'll need to collaborate with data scientists and scrutinise results together using the scientific method. AI Assurance is never about ticking boxes – it's about deeply understanding how these systems work and how to test them properly. And doing that requires data science skills and knowledge at multiple levels.

Think of it as learning to read increasingly complex stories. At the foundation level, you're learning the basic language of AI – statistics, probability, and fundamental machine learning concepts. You're getting comfortable with different AI architectures too – understanding how a basic neural network differs from a transformer model, or why we might choose reinforcement learning for one task but supervised learning for another. You can look at a model's confusion matrix and understand what it really means when it shows high precision but low recall in minority classes – recognising that this might signal a fairness issue hiding behind seemingly good overall performance metrics.
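To make that confusion matrix point concrete, here is a minimal sketch in plain Python. The counts are invented for illustration and the function name `per_class_precision_recall` is my own, not from any library. It shows how overall accuracy can look healthy while recall on the minority class is poor.

```python
def per_class_precision_recall(confusion):
    """Return a (precision, recall) pair for each class.

    confusion[i][j] is the number of examples whose true class is i
    and whose predicted class is j.
    """
    n = len(confusion)
    results = []
    for k in range(n):
        true_pos = confusion[k][k]
        predicted_k = sum(confusion[i][k] for i in range(n))  # column total
        actual_k = sum(confusion[k])                          # row total
        precision = true_pos / predicted_k if predicted_k else 0.0
        recall = true_pos / actual_k if actual_k else 0.0
        results.append((precision, recall))
    return results


# Hypothetical counts: 950 majority-class and 50 minority-class examples.
confusion = [
    [948, 2],   # true majority: 948 predicted majority, 2 predicted minority
    [32, 18],   # true minority: 32 predicted majority, 18 predicted minority
]

total = sum(sum(row) for row in confusion)
accuracy = sum(confusion[k][k] for k in range(len(confusion))) / total
metrics = per_class_precision_recall(confusion)

print(f"overall accuracy:   {accuracy:.3f}")
print(f"minority precision: {metrics[1][0]:.3f}")
print(f"minority recall:    {metrics[1][1]:.3f}")
```

With these made-up numbers the headline accuracy is above 96%, yet the model finds barely a third of the minority-class cases. That is the kind of gap worth raising with the team.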

As you move to the core level, you're not just reading the story but analyzing the plot. You're designing comprehensive evaluation approaches and diving deep into how different AI architectures process information – from how attention mechanisms work in language models to how computer vision systems build their understanding of images layer by layer. You're working with sophisticated interpretability tools, understanding attention patterns and feature importance, and spotting potential biases in test results. When a team says their model achieves "state-of-the-art performance," you know to dig into their evaluation methodology, checking for distribution shifts between test and deployment scenarios, and examining performance stability across different subgroups.
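One simple way to start examining performance stability across subgroups is to break a single headline metric down by group. The sketch below assumes evaluation records arrive as `(group, true_label, predicted_label)` tuples; that layout, the group names and the data are all hypothetical.

```python
from collections import defaultdict


def accuracy_by_subgroup(records):
    """Compute accuracy separately for each subgroup.

    records is an iterable of (group, y_true, y_pred) tuples.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    return {group: correct[group] / total[group] for group in total}


# Invented evaluation results for two subgroups, "A" and "B".
records = (
    [("A", 1, 1)] * 9 + [("A", 1, 0)] * 1
    + [("B", 1, 1)] * 3 + [("B", 1, 0)] * 2
)

overall = sum(int(t == p) for _, t, p in records) / len(records)
by_group = accuracy_by_subgroup(records)
gap = max(by_group.values()) - min(by_group.values())

print(f"overall accuracy: {overall:.2f}")
for group, acc in sorted(by_group.items()):
    print(f"  group {group}: {acc:.2f}")
print(f"largest gap between groups: {gap:.2f}")
```

A real review would use the team's own metrics and check whether each subgroup has enough examples to support a conclusion, but even this breakdown shows how an overall figure can hide a much weaker result for one group.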

By the time you reach mastery, you're helping write new chapters. You're developing novel ways to evaluate emerging AI capabilities, creating new testing methodologies for capabilities we've never seen before, and working with research teams to understand the frontiers of what's possible. You're tackling complex questions about AI interpretability – not just using existing tools but helping develop new approaches to understand how advanced models reason and make decisions. You might be investigating how different architectural choices affect model behavior, or developing new frameworks for testing emergent capabilities in large language models. At each stage, your scientific understanding deepens from "what's happening" to "why it's happening" to ultimately "what could happen next." It's a journey from being able to validate test results to designing sophisticated experiments to eventually helping shape how we evaluate and understand AI systems altogether.

Engineering

Next up is Engineering. This is where we bridge the gap between theoretical understanding and practical implementation. You need to understand how AI systems are actually built, deployed, and operated in the real world. It's not about becoming a machine learning engineer yourself, but rather about understanding enough of the engineering practices and challenges to effectively evaluate them. You don't need to know how to build an AI system from scratch, but you do need to understand the engineering principles that make it work.

At the foundation level, you're learning the basic building blocks of AI engineering. You're getting comfortable with how teams version control not just their code, but their data and models too. You understand why it matters that a team can trace exactly which dataset and which model version led to a particular output. You're learning about ML workflows – how a model goes from training to testing to deployment – and you're picking up the fundamental security concepts unique to AI systems. When a team tells you they're deploying a new model, you know what questions to ask about their testing procedures and rollback plans.

As you progress to the core level, you're dealing with the complexities of production AI systems. You understand sophisticated deployment patterns like canary releases and shadow deployments, where new models run alongside existing ones to validate performance before taking live traffic. You're evaluating how teams handle model monitoring and updates – not just whether they can detect issues, but whether they can respond effectively when things go wrong. You're looking at questions like how they handle data drift, or how they ensure model performance remains stable under different loads. You're also diving deeper into AI-specific security challenges, understanding how to protect models from extraction attacks or prompt injection vulnerabilities.
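If shadow deployments are new to you, the idea can be sketched in a few lines. This is a toy illustration under my own assumptions, not how any particular platform implements it: the two "models" are simple threshold functions, and `shadow_compare` is a hypothetical helper name.

```python
def shadow_compare(requests, live_model, shadow_model):
    """Serve the live model's output while recording how often the
    shadow (candidate) model would have answered differently."""
    served = []
    disagreements = 0
    for request in requests:
        live_output = live_model(request)
        shadow_output = shadow_model(request)  # recorded only, never served
        served.append(live_output)
        if shadow_output != live_output:
            disagreements += 1
    rate = disagreements / len(requests) if requests else 0.0
    return served, rate


# Hypothetical models: each flags a score at or above its own threshold.
def live_model(score):
    return score >= 0.5


def shadow_model(score):
    return score >= 0.6


requests = [0.1, 0.55, 0.7, 0.58, 0.9]
served, disagreement_rate = shadow_compare(requests, live_model, shadow_model)

print("served outputs:", served)
print(f"shadow disagreement rate: {disagreement_rate:.0%}")
```

The point to notice is that users only ever see the live model's output. The candidate runs on the same traffic purely so its behaviour can be compared before it is trusted with real decisions.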

At the mastery level, you're working with teams at the frontiers of AI engineering. You're evaluating novel system architectures and helping teams navigate uncharted territories. When a team proposes using a mixture-of-experts architecture to deploy their large language model, you understand both the potential benefits and the hidden complexities. You can assess whether their routing mechanisms are robust, whether their fallback systems are adequate, and how their architecture will handle scale. You're thinking about problems we're still learning to solve – like how to maintain system safety during online learning, how to implement reliable controls for systems that might develop new capabilities during operation, or how to monitor the safety of systems that have real physical effects. You're helping teams push boundaries while ensuring they maintain the rigorous engineering practices that keep AI systems safe and reliable.

Throughout this journey, you're building a practical understanding of how AI engineering decisions impact system reliability and safety. From basic deployment patterns to cutting-edge architectures, you're learning to evaluate not just whether a system works today, but whether it's built to remain reliable, secure, and safe over time.

Governance

The Governance domain is where our systematic thinking about what could go wrong meets our structured approach to preventing it. This isn't the traditional compliance world of annual assessments and static controls – AI systems demand a more dynamic, nuanced approach. In this domain, you first learn to put in place the basic foundations of governance, risk and compliance, then you come to understand risks that are more complex and harder to handle, and eventually you build sophisticated governance programs that span multiple systems, sectors and countries.

At the foundation level, you're developing your risk radar. You're learning to spot the common ways AI systems can go wrong – from data drift that slowly degrades performance to feedback loops that amplify biases. When someone proposes deploying a new language model for customer service, you're thinking through the cascade of potential issues: What happens if it provides incorrect information? How could it inadvertently disclose sensitive data? What biases might it amplify? You're learning to design basic controls too, understanding the difference between preventive measures like input validation and detective controls like performance monitoring. Most importantly, you're learning that effective controls in AI aren't just about rules and restrictions – they're about designing systems that are inherently safer and more reliable.
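The difference between a preventive and a detective control is easier to see side by side. Here is a minimal sketch with invented thresholds and hypothetical function names: one check that stops bad input before it reaches the model, and one that raises a flag after the fact when observed accuracy drops.

```python
def validate_input(text, max_chars=500):
    """Preventive control: reject unsuitable input before the model sees it.

    The 500-character limit is an arbitrary example value.
    """
    if not text.strip():
        return False, "empty input"
    if len(text) > max_chars:
        return False, "input too long"
    return True, "ok"


def accuracy_alert(outcomes, threshold=0.9):
    """Detective control: return True when observed accuracy is below threshold.

    outcomes is a list of booleans, one per reviewed response
    (True means the response was judged correct).
    """
    if not outcomes:
        return False
    accuracy = sum(outcomes) / len(outcomes)
    return accuracy < threshold


print(validate_input("Where is my order?"))
print(validate_input(""))
print(accuracy_alert([True] * 8 + [False] * 2))   # 80% accurate
print(accuracy_alert([True] * 19 + [False] * 1))  # 95% accurate
```

Neither control is enough alone. The first never notices a model that quietly gets worse, and the second only tells you after customers have already seen the wrong answers.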

Moving to the core level, you're working with sophisticated risk frameworks specifically designed for AI. You're not just identifying individual risks anymore – you're understanding how they interact and compound. You can look at a complex AI system and map out risk scenarios across different dimensions: technical risks like model drift, operational risks like deployment issues, and strategic risks like reputation damage. You're designing comprehensive control programs that adapt to changing conditions. When a team says they have "human oversight" of their AI system, you know to dig deeper: Do the humans have the right information to make decisions? Do they have the authority to override the system? Are they supported by tools and processes that make their oversight effective? You're also developing systematic approaches to measuring control effectiveness, understanding that in AI, controls often need to be as dynamic as the systems they protect.

At the mastery level, you're developing new approaches to AI governance and risk management. You're working with emerging AI capabilities that don't fit neatly into existing risk frameworks. How do you assess the risks of a system that might develop new capabilities during operation? How do you design controls for AI systems that interact with each other in complex ways? You're building governance frameworks that can adapt to emerging technologies while maintaining robust oversight. You're thinking about questions like how to maintain effective risk management when systems are constantly learning and evolving, or how to design meaningful human oversight for increasingly sophisticated AI systems. You might be developing new methodologies for testing control effectiveness, or creating frameworks for evaluating ethical risks in advanced AI systems.

Throughout this progression, you're building both the analytical rigor to assess complex risks and the practical wisdom to manage them effectively. You're learning not just to identify what could go wrong, but to work with teams to build systems that are more likely to go right. The journey takes you from understanding basic risk assessment to designing sophisticated governance frameworks that can adapt to the rapidly evolving landscape of AI technology.

This isn't just about compliance anymore – it's about building systematic approaches to ensuring AI systems remain safe, reliable, and aligned with human values as they become more powerful and complex. Each level of expertise adds new layers of understanding, from tactical risk management to strategic governance of emerging technologies.

Policy & Law

Policy & Law might seem like the most traditional of our domains, but in AI assurance, it's anything but business as usual. We're not just interpreting existing regulations – we're actively helping shape new ones as they emerge. You're not just learning the vocabulary of AI policy and law, but helping create it, bridging the gap between technical innovation and regulatory frameworks.

At the foundation level, you're building your regulatory literacy. You're learning to navigate the emerging AI regulatory landscape – understanding what the EU AI Act means when it talks about "high-risk systems," or how different jurisdictions approach algorithmic impact assessments. But this isn't just about memorising rules. You're learning to understand the intent behind regulations, because in AI, we often need to apply existing principles to entirely new situations. When a regulation talks about "transparency," you're learning to ask the right questions: Transparent to whom? In what way? For what purpose? You're also picking up the fundamentals of standards frameworks, understanding how they translate into practical requirements for AI systems.

As you advance to the core level, you're working with complex regulatory requirements across multiple jurisdictions and frameworks. You can look at an AI system and map out how different regulations intersect – how privacy laws interact with AI-specific requirements, how product safety regulations apply to autonomous systems, how consumer protection frameworks extend to algorithmic decision-making. You're not just following standards anymore – you're actively participating in their development, bringing practical experience to shape frameworks that work in the real world. When a team proposes a novel use of AI, you can help them navigate the regulatory landscape proactively, identifying requirements they'll need to meet and helping design compliance approaches that work with their innovation timeline rather than against it.

At the mastery level, you're operating at the intersection of emerging technology and evolving policy. You're helping shape regulatory approaches for capabilities we're still trying to understand. How should we regulate AI systems that can develop new abilities during operation? What does meaningful human oversight look like for increasingly autonomous systems? You're working across multiple legal domains, understanding how AI reshapes existing frameworks – where machine learning models intersect with medical device regulations, where neural networks meet product safety laws, where automated decisions collide with consumer protection requirements. You're not just interpreting requirements – you're helping create frameworks that can adapt to rapid technological change while maintaining robust oversight.

The journey in this domain is particularly dynamic because the landscape itself is constantly evolving. You're building the expertise to not just follow regulations but to help shape them, to translate between technical innovation and regulatory requirements, and to build bridges between different stakeholders. You're learning to think not just about compliance but about all-encompassing international governance – how to create frameworks that promote innovation while ensuring appropriate safeguards.

This isn't about being a lawyer who understands AI or a technologist who understands law – it's about developing the unique expertise needed to navigate and shape the intersection of these fields. Each level adds new dimensions to your understanding, from practical compliance to strategic policy development.

Bringing It All Together

Here's the most exciting thing about this framework – your journey through it will be uniquely yours. Just like every AI system presents its own challenges, every AI assurance professional develops their own blend of expertise. Maybe you'll find yourself drawn to the scientific challenges of evaluating large language models, or perhaps you'll excel at crafting governance frameworks for emerging AI capabilities. Some of us dive deep into the statistical intricacies of model evaluation, while others focus on building bridges between technical teams and regulators.

But here's where the real magic happens – in your ability as an AI Assurance Professional to bring these domains together. Think about it: data scientists might deeply understand model architecture, engineers know how to build robust systems, lawyers can interpret regulations, and auditors know how to assess controls. But as an AI assurance professional, you become the conductor who helps these specialists align and work together. You're the one who can understand enough of each discipline to ensure nothing falls through the cracks, to spot potential issues that might not be visible from any single perspective.

You’ll find all these 200 skills mapped out across 40 topics in four domains within the accompanying poster. Each skill builds from foundation to mastery, creating multiple pathways for your professional development. I’m sure it will evolve over time, and I’d love to hear your ideas and feedback.

In future articles, I'll dive deeper into each of these domains, examining how these skills manifest in practice and exploring the rich resources available for developing them. We'll look at specific examples, discuss real-world applications, and share practical approaches for building expertise. I hope you find this a useful field guide to professional development in AI assurance – comprehensive enough to cover the breadth of what you might need, but flexible enough to adapt to your unique journey. By building your expertise across these domains, you'll be well-equipped to help ensure AI systems are developed and deployed responsibly, whatever challenges tomorrow brings.

Ready to dive in?