The New Profession of AI Assurance

Building safer AI at the intersection of science, engineering, governance, and law

When I stepped into the role of leading Responsible AI Assurance at Amazon Web Services just over a year ago, I had the benefit of two decades of experience leading engineering, governance and regulatory assurance programs at both Amazon and Microsoft. Working across healthcare, retail, finance, government and national security sectors, I had the opportunity to design, build, operate and assure cloud systems to meet demanding regulations and dozens of compliance frameworks for security, privacy and resilience. I had the rare privilege of building whole new hyperscale cloud regions from an idea on a whiteboard to existence in vast concrete and steel datacentres, and then taking that infrastructure and its operations through assurance processes fit for national-security-level data. And yet nothing quite prepared me for the unique challenges of AI assurance. This past year has felt like a real masterclass in how traditional assurance practices are evolving to meet our expectations of AI systems.

I've learned that while our foundational skills as assurance professionals in governance, security, privacy and resilience are invaluable, they're just the beginning of what we need. Through countless conversations with fellow assurance professionals, regulators, lawyers, scientists and engineers, I've witnessed a lot of uncertainty about how best to adapt and apply assurance practices in AI. I’ve also seen the exciting opportunities it presents for those of us seeking to grow our expertise and careers within this new profession. And it is truly a new profession, one that together we’re creating as we uniquely work at the intersection of science, engineering, governance, and law.

So what is AI Assurance?

It is the systematic process of evaluating, validating, and documenting that intelligent systems operate reliably, ethically, and safely for their intended purpose. AI Assurance provides evidence-based confidence that systems perform as expected, handle data appropriately, and make fair decisions. It provides the frameworks to manage risk and compliance throughout the lifecycle of their use.

In practice, this includes testing that AI systems meet technical standards for accuracy, reliability, and robustness; verifying that appropriate controls exist to manage risks; and demonstrating compliance with relevant laws, regulations and ethical principles. Think of it as quality control for AI, but instead of just checking if the system is secure and works as expected, we're verifying that it works responsibly, consistently, and transparently.

But AI Assurance professionals don't just verify systems—we architect trust into the foundations of those systems, their development and their use. We combine scientific rigour with ethical foresight to help ensure that AI systems are accurate, fair, transparent, and aligned with human values. Our job is ultimately about enabling the incredible potential of AI while ensuring it remains safe and trustworthy. It’s a profession that demands a breadth of knowledge, skill, experience, and above all curiosity that cuts across and connects specialised domains of science, engineering, governance and law.

In this series, I'll share practical insights from my journey, insights that I hope will help you build the expertise and confidence to enhance your own career, or help you to be as effective as possible as you lead similar initiatives in your own organisation.

I’d like to start by exploring five fundamental ways that AI is transforming the mindset and practice of assurance.

Scientific Foundations

Science first. Traditional information assurance operates in a world of relatively binary choices and clearly laid-out controls. Does the system implement required security measures? Yes or no. Are audit logs maintained according to policy? Check or fail. Is administrative access sufficiently restricted and data appropriately encrypted? These clear-cut decisions have served us pretty well in managing traditional IT risks.

But intelligent systems introduce a new dimension of complexity. For me, this past year culminated in achieving ISO 42001 certification for AWS services [1]; we became the first large cloud provider to demonstrate independent conformance to this new standard. But when a standard like ISO 42001 expects us to ensure AI systems are "fair", we're no longer in the realm of simple compliance checking. Instead, we're conducting scientific analyses that require an understanding of statistical significance, confidence intervals, and performance thresholds across different populations and contexts.

When evaluating a large language model, we can't simply tick a box marked "unbiased" or "accurate". We have to work with scientists to design and run experiments that test the model's performance across different demographics, languages, and use cases. Each assessment requires careful statistical analysis and nuanced interpretation of results. We make judgement calls. Some measures are trade-offs against others – such as the rate of false positives versus false negatives in an accuracy evaluation. We're becoming part auditor, part data scientist, needing to understand not just whether something works, but how and why it works the way it does. And then we need to be able to explain those results and judgements to others.
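
To make the false-positive versus false-negative trade-off concrete, here is a minimal sketch of the kind of per-group analysis a scientist might hand to an assurance reviewer. It assumes binary labels and predictions and uses a Wilson score interval for the confidence bounds; the function names and record layout are my own illustration, not a prescribed method or anything taken from ISO 42001.

```python
import math
from collections import defaultdict

def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (about 95% when z=1.96)."""
    if n == 0:
        return (0.0, 1.0)  # no data: the rate could be anything
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return (max(0.0, centre - half), min(1.0, centre + half))

def error_rates_by_group(records):
    """records: iterable of (group, y_true, y_pred) with binary 0/1 values."""
    counts = defaultdict(lambda: {"fp": 0, "fn": 0, "neg": 0, "pos": 0})
    for group, y_true, y_pred in records:
        c = counts[group]
        if y_true == 1:
            c["pos"] += 1
            c["fn"] += int(y_pred == 0)  # missed a true positive
        else:
            c["neg"] += 1
            c["fp"] += int(y_pred == 1)  # flagged a true negative
    report = {}
    for group, c in counts.items():
        report[group] = {
            "fpr": c["fp"] / c["neg"] if c["neg"] else None,
            "fpr_ci": wilson_interval(c["fp"], c["neg"]),
            "fnr": c["fn"] / c["pos"] if c["pos"] else None,
            "fnr_ci": wilson_interval(c["fn"], c["pos"]),
        }
    return report

if __name__ == "__main__":
    # Made-up evaluation results for two hypothetical groups.
    sample = (
        [("A", 0, 1)] * 2 + [("A", 0, 0)] * 8 + [("A", 1, 0)] * 1 + [("A", 1, 1)] * 9
        + [("B", 0, 1)] * 4 + [("B", 0, 0)] * 6 + [("B", 1, 0)] * 3 + [("B", 1, 1)] * 7
    )
    for group, stats in error_rates_by_group(sample).items():
        print(group, stats)
```

With small groups the intervals are wide and often overlap, which is exactly why a raw difference in error rates is not, on its own, evidence of unfair behaviour.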

Risk Management in Context

The shift in risk management might be even more profound. Traditional governance approaches take broad enterprise-wide risk assessments and turn them into directives for what become mostly uniform enterprise-wide controls: all systems must meet these security requirements; all data must be protected in these ways; all suppliers must undergo this screening. But AI assurance demands a more nuanced, use-case specific approach. One size does not fit all.

The risks identified mostly lead to design or dataset changes, not additional operational controls. For example, if a speech-to-text model is not sufficiently robust in noisy environments, the solution is most likely to add more data from those noisy environments. During our ISO 42001 certification journey, we had to develop entirely new risk assessment frameworks that could adapt to each AI service's specific context and potential impacts. Each use case demands its own risk assessment and its own selection of risk treatments and controls.

Expectations of Transparency

Transparency in AI assurance goes far beyond conventional audit reports. While we're used to documenting control effectiveness and compliance status, the regulations and compliance frameworks related to AI systems require unprecedented openness about their inner workings. The EU AI Act [2] introduces a tiered approach to transparency – recognising that a chatbot used for customer service requires different levels of disclosure than an AI system making lending decisions or medical diagnoses.

For high-risk systems, the requirements are extensive. The Act demands detailed documentation on training data, algorithmic design choices, and performance metrics. A healthcare AI system, for instance, must provide comprehensive evidence of its safety and reliability, including detailed records of its training data, testing methodologies, and known limitations. Compare this to a low-risk system like a basic recommendation engine, where the transparency requirements focus more on ensuring users know they're interacting with AI rather than the technical underpinnings.

This shift means creating new types of artefacts: model cards that detail system capabilities and limitations, methodology papers that explain testing approaches, and performance reports that break down system behaviour and evaluation metrics across different scenarios. For high-risk applications, these documents become part of a broader technical file that must be maintained and updated throughout the system's lifecycle. It's like moving from a simple product label to a detailed scientific paper – the level of detail and rigour required increases dramatically with the potential impact of the system. It is the work of AI Assurance professionals to collaborate with scientists, engineers, and lawyers to develop mechanisms to achieve this heightened level of transparency.

This isn't just about regulatory compliance; it's about building trust through transparency. When an AI system is making decisions that significantly affect people's lives, they have a right to understand not just what the system does, but how it works and what safeguards are in place to protect their interests.
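
One way to keep an artefact like a model card maintainable is to treat it as structured data rather than a free-form document. The sketch below is only an illustration: the field names are my assumptions about what such a card might hold, not a schema required by the EU AI Act or any standard.

```python
import json
from dataclasses import dataclass, field, asdict

@dataclass
class ModelCard:
    """Hypothetical model card structure; every field name here is illustrative."""
    name: str
    version: str
    intended_use: str
    out_of_scope_uses: list = field(default_factory=list)
    training_data_summary: str = ""
    evaluation_results: dict = field(default_factory=dict)  # metric -> {scenario: value}
    known_limitations: list = field(default_factory=list)

    def missing_sections(self):
        """Names of sections still empty: a simple completeness check before review."""
        return [key for key, value in asdict(self).items() if value in ("", [], {})]

    def to_json(self):
        """Serialise the card so it can be versioned alongside the model."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)

if __name__ == "__main__":
    card = ModelCard(
        name="speech-to-text-demo",  # made-up system name
        version="0.1",
        intended_use="Transcribing call-centre audio in quiet environments",
        known_limitations=["Accuracy degrades in noisy environments"],
    )
    print(card.missing_sections())
    print(card.to_json())
```

Because the card is data, the completeness check can run automatically each time the model changes, which helps keep the technical file current throughout the lifecycle.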

New Forms of Evidence

The nature of evidence itself is transforming. Traditional system logs, operating procedures and configuration screenshots are being supplemented with Jupyter notebooks, statistical analyses, stakeholder impact assessments and mathematical proofs. To demonstrate compliance with requirements for model robustness, you might need to provide detailed notebooks showing adversarial testing results, complete with statistical analyses and methodology explanations.

One significant evolution in evidence relates to system impact assessment – a new kind of comprehensive analysis that tells the full story of how an AI system might affect different stakeholders. A thorough impact assessment examines those impacts across multiple dimensions. For a hiring AI system, we're not just looking at accuracy rates – we're studying how it might affect different demographic groups, whether it could inadvertently perpetuate historical biases, and how it might change workplace dynamics over time. We need to consider both direct effects (who gets hired) and indirect ones (how it might change the way people apply for jobs or develop their careers).

This requires a different kind of thinking than traditional assurance work. We need to blend quantitative analysis (performance metrics, statistical tests) with qualitative understanding (user interviews, scenario planning). We might discover that a system with excellent technical metrics could still have problematic real-world impacts, or that minor technical issues might have outsized effects on vulnerable populations.

Creating these assessments demands both breadth and depth of expertise. While you don't need to become a data scientist, you need to understand enough to evaluate and validate technical evidence effectively. You likely don't need to run the tests yourself, but you need to know enough to evaluate and represent their validity when scrutinised. Similarly, you need to grasp social science research methods, understand basic principles of ethics and fairness, and be able to think systematically about complex societal systems.

The result is a new kind of evidence that combines technical rigour with societal awareness. These impact assessments become living documents, updated as we learn more about how systems behave in the real world and how different stakeholders are affected. They represent a fundamental shift from simply documenting what a system does to understanding its role and influence in the broader human context.

The Regulatory Sprint

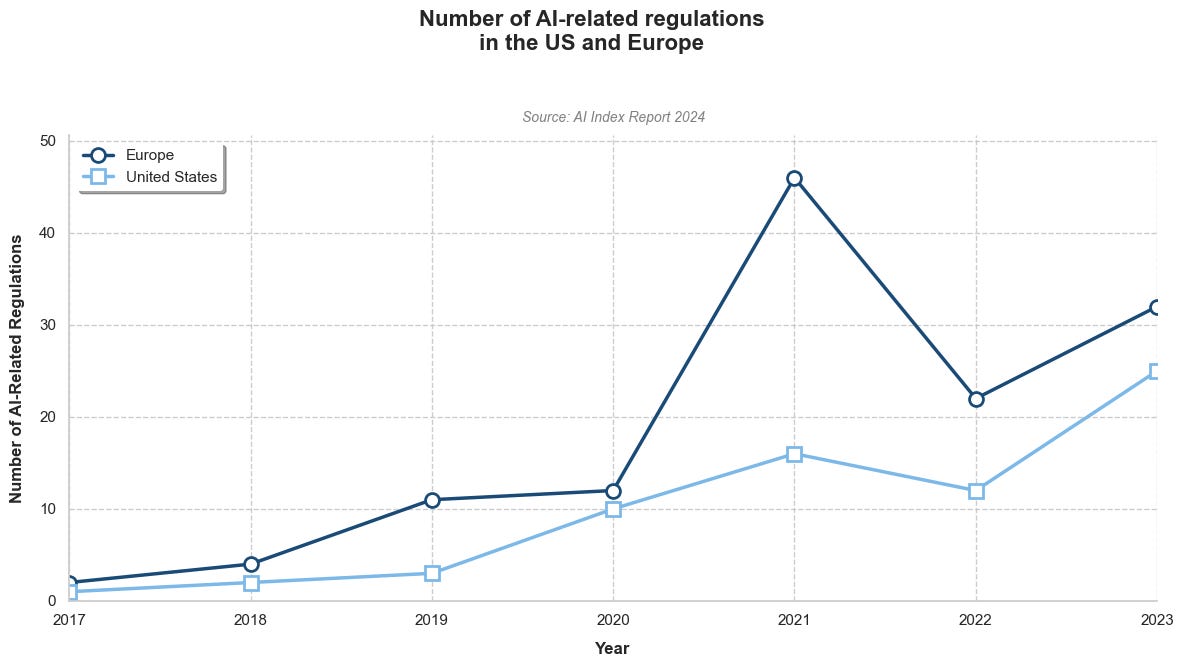

The regulatory landscape for AI is evolving faster than that of any other technology domain I've seen in my career. Lawmakers and regulators are proposing and enacting rules related to AI at an extraordinary and accelerating pace [3]. Imagine trying to build a house while the building codes are being rewritten monthly – that's what AI assurance feels like today.

The EU AI Act stands as the most comprehensive framework, introducing detailed requirements for AI systems, particularly around foundation models. But it's just one piece of a complex puzzle. In the United States, the NIST AI Risk Management Framework provides federal guidance, while states like Colorado and California are developing their own AI regulations.

The complexity multiplies across sectors. In the US, healthcare organisations must navigate both HIPAA requirements and the FDA's evolving guidance on AI as a medical device [4]. Financial institutions balance SEC requirements with Federal Reserve guidance on AI in lending [5]. Critical infrastructure providers face Department of Energy standards for AI system reliability.

Australia is actively developing regulatory frameworks to ensure the safe and responsible use of artificial intelligence. In September 2024, the Australian Government introduced a set of Voluntary AI Safety Standards [6], comprising ten guardrails designed to guide organisations in the ethical deployment of AI systems. These guardrails broadly align with international standards, but not exactly. In parallel, the Australian Government proposed Mandatory Guardrails for AI in High-Risk Settings [7], targeting AI systems that pose significant risks to individuals or society. These mandatory guardrails closely mirror the voluntary standards and are currently under consideration for implementation.

What makes this particularly challenging is how these requirements evolve. Take model drift monitoring – the EU AI Act requires it, but what constitutes acceptable drift varies by use case and sector. A financial trading algorithm might need near-real-time drift detection, while a content recommendation system might allow for weekly assessments [8].
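
As a rough illustration of how "acceptable drift" can be made use-case specific, the sketch below computes a Population Stability Index (PSI), one common drift statistic, and compares it against a per-use-case policy. The thresholds and check cadences are assumptions chosen for the example (0.10 and 0.25 are often quoted rules of thumb), not values taken from the EU AI Act or any regulator.

```python
import math

def population_stability_index(baseline, current, bins=10):
    """PSI between two samples of one numeric feature or model score."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the baseline range land in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor at a tiny value so empty bins do not produce log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    b, c = proportions(baseline), proportions(current)
    return sum((ci - bi) * math.log(ci / bi) for bi, ci in zip(b, c))

# Illustrative policy only: thresholds and cadences are assumptions.
DRIFT_POLICY = {
    "trading": {"psi_threshold": 0.10, "check_every_minutes": 1},
    "recommendations": {"psi_threshold": 0.25, "check_every_minutes": 7 * 24 * 60},
}

def needs_review(psi, use_case):
    """True when measured drift exceeds the tolerance set for this use case."""
    return psi > DRIFT_POLICY[use_case]["psi_threshold"]

if __name__ == "__main__":
    baseline = [i / 100 for i in range(100)]
    shifted = [x + 0.3 for x in baseline]  # synthetic drifted sample
    psi = population_stability_index(baseline, shifted)
    for use_case in DRIFT_POLICY:
        print(use_case, round(psi, 3), needs_review(psi, use_case))
```

The same measured drift can trigger a review for one system and not another; the statistic is shared, while the tolerance and cadence belong to the use case.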

This rapid evolution demands a new kind of regulatory agility. We can't just implement controls and move on. Instead, we're building flexible frameworks that can adapt as requirements change. For example, bias assessment protocols need to include customisable metrics that can be adjusted based on local regulatory definitions of fairness (because perhaps unsurprisingly, there is no common agreement on what fairness really means).
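
Here is a minimal sketch of what "customisable metrics" could mean in practice: two well-known fairness measures behind a common interface, selected by a per-jurisdiction profile. The profile names, metric choices and tolerances are hypothetical and do not describe any actual regulation.

```python
from collections import defaultdict

def _rate_gap(pairs):
    """pairs: iterable of (group, outcome 0/1). Returns max minus min group rate."""
    totals, hits = defaultdict(int), defaultdict(int)
    for group, outcome in pairs:
        totals[group] += 1
        hits[group] += outcome
    rates = [hits[g] / totals[g] for g in totals]
    return max(rates) - min(rates) if rates else 0.0

def demographic_parity_gap(records):
    """Gap in positive-prediction rates across groups."""
    return _rate_gap((g, y_pred) for g, _, y_pred in records)

def equal_opportunity_gap(records):
    """Gap in true-positive rates across groups (only rows where y_true == 1)."""
    return _rate_gap((g, y_pred) for g, y_true, y_pred in records if y_true == 1)

METRICS = {
    "demographic_parity": demographic_parity_gap,
    "equal_opportunity": equal_opportunity_gap,
}

# Hypothetical profiles: which definition of fairness applies, and how much gap is tolerated.
PROFILES = {
    "jurisdiction_a": {"metric": "demographic_parity", "max_gap": 0.10},
    "jurisdiction_b": {"metric": "equal_opportunity", "max_gap": 0.10},
}

def assess(records, profile_name):
    """records: iterable of (group, y_true, y_pred) with binary 0/1 values."""
    profile = PROFILES[profile_name]
    gap = METRICS[profile["metric"]](list(records))
    return {"metric": profile["metric"], "gap": gap, "passed": gap <= profile["max_gap"]}

if __name__ == "__main__":
    # Made-up predictions: equal true-positive rates, unequal selection rates.
    records = (
        [("A", 1, 1)] * 4 + [("A", 1, 0)] * 1 + [("A", 0, 1)] * 2 + [("A", 0, 0)] * 3
        + [("B", 1, 1)] * 4 + [("B", 1, 0)] * 1 + [("B", 0, 0)] * 5
    )
    for name in PROFILES:
        print(name, assess(records, name))
```

In this made-up data the same model fails one profile and passes the other, which is the practical consequence of having no common agreement on what fairness means.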

The role of AI assurance professionals has evolved accordingly. We're not just compliance checkers – we're becoming regulatory translators and strategists. We track developments across jurisdictions, anticipate regulatory trends, and build adaptable governance frameworks that can evolve with the landscape.

This means staying current with an ever-growing body of guidance: international standards like ISO 42001; regional frameworks like the EU AI Act; national guidelines like NIST's AI RMF [9]; sector-specific requirements from regulators; local and state regulations like New York City's Local Law 144 [10], which requires bias audits for AI hiring tools; and industry best practices and voluntary frameworks, like MITRE's ATLAS [11] (Adversarial Threat Landscape for Artificial-Intelligence Systems).

More importantly, we need to understand how these requirements interact and overlap. A single AI system might need to satisfy EU transparency requirements, US sector-specific regulations, and local ethical AI guidelines simultaneously, along with all the pre-existing security, privacy and resilience requirements.

The key to success in this environment isn't just knowing the requirements – it's building governance approaches that can flex and adapt as the landscape evolves. We're creating automated documentation systems, modular control frameworks, scalable testing methodologies, and mechanisms for continuous verification that can incorporate new requirements without requiring complete redesigns.
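
A modular control framework can be as simple as a mapping from each control to the requirements it evidences, so that a new requirement becomes a gap to close rather than a reason to redesign. The sketch below assumes made-up control and requirement identifiers; none of them correspond to real clause numbers.

```python
# Hypothetical control library: each control lists the requirement IDs it evidences.
CONTROLS = {
    "CTRL-DOC-01": {"standard_x.documentation", "regulation_y.technical_file"},
    "CTRL-BIAS-02": {"standard_x.fairness", "local_law_z.bias_audit"},
    "CTRL-MON-03": {"regulation_y.monitoring"},
}

def coverage_gaps(controls, requirements):
    """Requirements that no existing control provides evidence for."""
    covered = set().union(*controls.values())
    return sorted(set(requirements) - covered)

def controls_for(controls, requirement):
    """Controls whose evidence can be reused for a given requirement."""
    return sorted(cid for cid, reqs in controls.items() if requirement in reqs)

if __name__ == "__main__":
    in_scope = [
        "standard_x.documentation",
        "standard_x.fairness",
        "regulation_y.monitoring",
        "new_guardrail.human_oversight",  # a newly published requirement
    ]
    print(coverage_gaps(CONTROLS, in_scope))
    print(controls_for(CONTROLS, "standard_x.fairness"))
```

When a new requirement arrives, only the uncovered items need new or extended controls; everything already mapped continues to produce reusable evidence.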

Looking Ahead

As assurance professionals navigating this transformation, it’s clear that our foundational skills in information assurance remain very valuable. The systematic thinking, attention to detail, and understanding of risk management practices and control frameworks continue to serve us well. But we need to augment these with new skills so that we can be active contributors to scientific analysis, technical evaluation, and contextual risk assessment.

In my next article, I'll explore some of the critical skills needed for AI assurance and start to lay out a roadmap for professional development in four critical domains: scientific knowledge, engineering understanding, new governance practices, and policy understanding. Covering over 80 discrete skills, I'll break down each one with practical steps and resources to learn and apply.

I hope you’ll join me on the journey.

[1] https://aws.amazon.com/blogs/machine-learning/aws-achieves-iso-iec-420012023-artificial-intelligence-management-system-accredited-certification/

[2] https://eur-lex.europa.eu/eli/reg/2024/1689/oj/eng

[3] https://aiindex.stanford.edu/report/

[4] https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-and-machine-learning-software-medical-device

[5] https://files.consumerfinance.gov/f/documents/cfpb_automated-valuation-models_final-rule_2024-06.pdf

[6] https://www.industry.gov.au/publications/voluntary-ai-safety-standard/10-guardrails

[7] https://consult.industry.gov.au/ai-mandatory-guardrails

[8] https://encord.com/blog/model-drift-best-practices/

[9] https://www.nist.gov/publications/artificial-intelligence-risk-management-framework-ai-rmf-10

[10] https://www.cdiaonline.org/wp-content/uploads/2021/11/NYC-Local-Law-144.pdf

[11] https://atlas.mitre.org/
