Building your AI System Inventory

A practical framework for mapping out your AI System Inventory in terms of systems, capabilities, use cases, users and stakeholders.

In previous articles, we explored why high-integrity assurance matters, built the business case for AI governance, and unpacked the essential components of an AI Management System (AIMS). Now it's time to move from theory to practice – to begin the tangible work of building your AIMS. The foundation of this work is a clear understanding of your AI landscape, captured in an AI System Inventory.

AI systems can be surprisingly elusive. They might be embedded in vendor software, hidden within automation tools, consumed as a remote service, or running as experiments in different departments. Some may not even be recognised as AI by the teams using them. So, our first task is to define a scope and map out the AI systems we’re dealing with. After all, you can't govern what you can't see.

I’ll approach this in two parts. First, we'll explore how to systematically map the terrain – understanding where AI exists in your organisation through the business lens of use cases, capabilities, and systems. Then, in a second article, we'll dive deeper into documenting what's inside each system – the data, models, interfaces, and agents that make them work. Together, these create your AI inventory, the foundation on which all other aspects of your AIMS will be built.

Quick Sidebar: The EU Definition of an AI System

Like many others, I was a little bemused to see the level of ambiguity in the European Union’s newly published definition of an AI System[1]. But I was also surprised by some of the criticism of the definition paper. The truth is that even AI experts often fundamentally disagree about what constitutes "artificial intelligence."

This isn't surprising. AI isn't like a car or a computer - discrete objects with clear boundaries. It's more like "transportation" or "communication" - a broad concept that encompasses many interrelated technologies and approaches. Some see AI primarily through the lens of machine learning and neural networks. Others focus on knowledge representation and reasoning systems. Still others emphasise the role of natural language processing or computer vision. Each perspective captures something true about AI while missing other important aspects.

Given this inherent complexity, I think we're asking too much if we expect regulators to produce a perfect, universally agreeable definition. The EU's attempt somewhat inevitably blurs lines between advanced analytics, traditional rule-based systems, and modern machine learning approaches. This ambiguity isn't necessarily a failure of drafting - it may simply reflect the genuine difficulty of precisely defining something as multifaceted as AI.

But as engineers, scientists and assurance professionals, we don't need to wait for perfect regulatory definitions to do our jobs effectively. What matters more is that we clearly understand and can articulate what we mean by an AI system in our specific context. When we're building safety-critical systems that make consequential decisions affecting human lives, we need practical frameworks for identifying AI components, mapping their capabilities and interactions, and implementing appropriate controls - regardless of how they might eventually be classified under various regulations.

I don’t mean to dismiss the importance of regulatory frameworks - they serve a vital role in establishing minimum standards and accountability. But rather than getting caught up in definitional debates, we should focus on the substantive work of building robust, responsible AI systems. After all, a self-driving car won't become safer because we've perfectly categorised it under a regulation. It becomes safer through rigorous engineering, careful testing, and thoughtful consideration of potential failure modes.

The real challenge isn't defining AI - it's ensuring that we develop and deploy AI systems responsibly, with appropriate safeguards and controls. That's work we can and should be doing today, even as the regulatory landscape continues to evolve. Let the lawyers and bureaucrats work on precise legal definitions. Our job is to build systems that are safe and reliable, regardless of how they're ultimately classified.

Drawing a boundary around your scope of work

Think of mapping your organisation's AI landscape as creating a detailed atlas. An explorer doesn't map an entire continent at once; they begin with a specific region and gradually expand outward. In the same way, documenting AI systems requires a thoughtful, systematic approach that starts with clear boundaries and a narrow scope.

This scope could be as focused as a single critical AI system that makes consequential decisions, or as broad as every AI-enabled capability across a global enterprise. The key is choosing a scope that's both meaningful and manageable. A healthcare provider might start by mapping just their radiology department's AI systems before expanding to other clinical areas; a bank might focus on client-facing use of AI in retail banking; a cloud application provider might focus on just one or a small number of services (as we did at Amazon). This focused approach allows you to develop your mapping methodology and governance approach with a clear set of stakeholders before scaling across the organisation.

For now, let's focus on defining that initial scope: drawing the boundaries of the territory you'll map. The scope needs to be clear enough that everyone understands what's included and what isn't. Avoid the temptation to include "all AI systems"; it is much better to start with a narrow, well-defined scope whose boundary aligns with your immediate governance priorities. Be mindful that this isn't an academic exercise, and not one you perform on your own; it's a crucial conversation with sponsors and stakeholders.

When defining scope, consider these questions: What business units or departments are included? Which types of AI systems matter most for your initial governance efforts? Are you including both internally developed and vendor-provided AI? Importantly, do you have the access and authority needed to effectively map everything within your chosen boundaries?

Start with a scope that's ambitious enough to matter but contained enough to succeed. You can always expand later, but starting too broad risks creating an incomplete or superficial inventory.

Use cases, capabilities and systems

Once you've defined your scope, it's time to start mapping out your AI systems, their capabilities, and how they're actually used in practice.

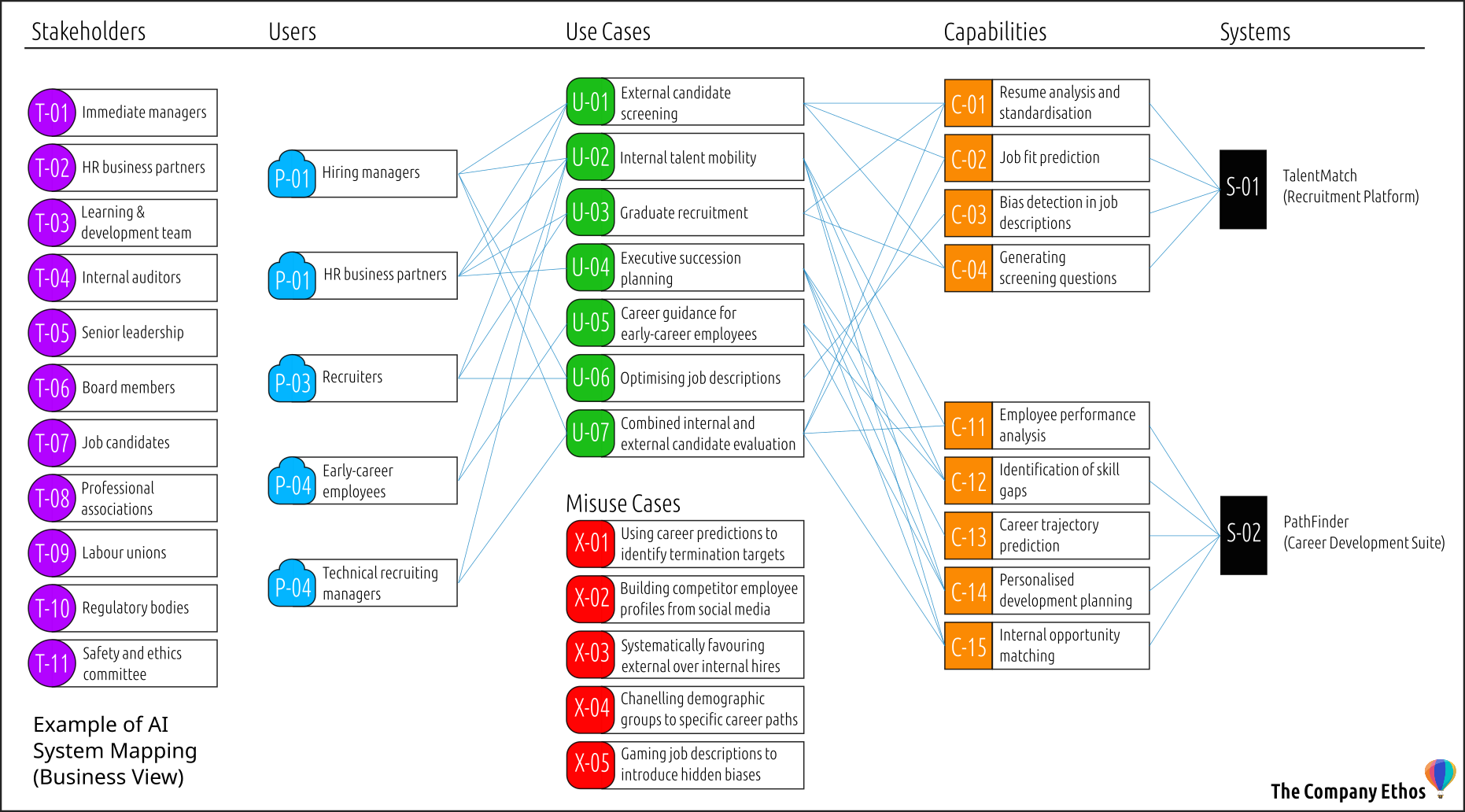

I’ll use an illustrative example related to talent management, a space where AI often operates in ways that aren't immediately visible. Imagine a large organisation that has invested in two sophisticated AI systems: a recruitment platform we'll call TalentMatch and a career development suite called PathFinder. At first glance, these might seem like separate tools for distinct purposes. But when we map them properly, we discover they share capabilities that create interesting overlaps and dependencies in how they're used.

TalentMatch, as a system, has several key capabilities. It can analyse resumes to extract and standardise candidate information, predict job fit based on historical success patterns, detect potential biases in job descriptions, and generate preliminary screening questions tailored to each role. Think of these capabilities as building blocks that can be assembled in different ways to serve various purposes.

PathFinder, meanwhile, brings its own set of capabilities. It can analyse employee performance data to identify skill gaps, predict career trajectory based on learning patterns, generate personalised development plans, and match employees with internal opportunities. Some of these capabilities, like skills analysis and role matching, overlap with TalentMatch but are tuned for internal rather than external candidates.

Now here's where it gets interesting – when we look at how these capabilities are actually used in practice, we find use cases that often bridge both systems. Consider a talent mobility program where a department needs to fill several positions. The hiring manager might use TalentMatch's job description analysis to ensure the role is described fairly, while simultaneously using PathFinder's internal matching to identify current employees who could grow into the role. The use case here isn't just "fill a position" but rather "optimise talent placement across internal and external candidates."

For each use case, we need to document several critical elements. First, who are the users? In our example, this might include hiring managers, HR business partners, and employees exploring career moves. What decisions are being influenced or automated? This could range from candidate shortlisting to career path recommendations. What's the impact scope – how many people are affected, how consequential are the decisions, what happens if something goes wrong?

We also need to understand the context of each use case. Is it part of a regulated process, like ensuring fair hiring practices? Does it handle sensitive data, like employee performance reviews? Are there specific compliance requirements or ethical considerations? A use case involving graduate recruitment might have different risk tolerances than one involving executive succession planning.

The capabilities themselves need careful documentation too. For each capability, we should try to understand its maturity level – is it experimental or proven? What are its known limitations? What data does it require to function effectively? For instance, TalentMatch's bias detection capability might need a diverse historical dataset to work reliably, while PathFinder's career trajectory predictions might need to account for industry-specific career paths.

The interplay between capabilities is particularly important to map. When PathFinder suggests an internal candidate for a role, does it use the same job analysis capabilities as TalentMatch? If one system's capabilities are updated or retrained, how does that affect use cases that depend on both systems? These interdependencies often reveal themselves only through careful mapping.

At the system level – which we'll explore in depth in the second article – we need to understand enough to see how capabilities are implemented and where they might interact. Are TalentMatch and PathFinder sharing data? Do they use consistent definitions for skills and competencies? Are there technical dependencies that could affect multiple use cases if one system experiences issues?
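A rough first check on the question of consistent skill and competency definitions is to compare the skill labels each system exposes. The sketch below is hypothetical: it assumes both systems can export a flat list of skill labels, and the normalisation rule (lower-casing and collapsing whitespace) is a deliberately simple stand-in for proper taxonomy mapping.

```python
def normalise(label: str) -> str:
    """Lower-case and collapse whitespace so 'Data  Analysis' matches 'data analysis'."""
    return " ".join(label.lower().split())


def compare_taxonomies(a: list[str], b: list[str]) -> dict[str, set[str]]:
    """Split two skill vocabularies into shared labels and labels unique to each side."""
    set_a = {normalise(s) for s in a}
    set_b = {normalise(s) for s in b}
    return {"shared": set_a & set_b, "only_a": set_a - set_b, "only_b": set_b - set_a}


# Hypothetical skill exports from the two illustrative systems
talentmatch_skills = ["Python", "Data Analysis", "Stakeholder Management"]
pathfinder_skills = ["python", "Data  analysis", "People Leadership"]

result = compare_taxonomies(talentmatch_skills, pathfinder_skills)
for key in ("shared", "only_a", "only_b"):
    print(key, sorted(result[key]))
```

Labels that appear on only one side are not necessarily errors, but each one is a question for the system owners: is this a genuinely different skill, or the same skill under another name?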

Sidebar – Key Definitions

🎯A Use Case is a specific situation where AI technology is applied to achieve a business objective. It's not just what the AI does, but why and for whom. When PathFinder suggests career paths to an employee, that's a use case. What makes it a use case rather than just a feature is that it involves real people, specific contexts, and measurable outcomes. A use case tells us what success looks like in practical terms.

💪A Capability is a distinct function that AI can perform – think of it as a specialised skill. Resume analysis, for instance, is a capability. What distinguishes a capability from a mere feature is that it can often be applied across multiple use cases. The same natural language processing capability that analyses resumes might also analyse job descriptions or performance reviews. Capabilities are the building blocks that, when combined, enable our use cases.

⚙️A System is the actual technological implementation that delivers these capabilities. TalentMatch isn't just software – it's a complete system encompassing models, data pipelines, interfaces, and agents all running on some form of physical infrastructure. What makes something a system rather than just a collection of capabilities is that it's a cohesive, deployable unit that can operate independently, even though it might integrate with other systems.

👩‍💼A User is someone who directly interacts with the AI system to achieve their goals. The key here is direct interaction – what distinguishes users from other participants is their hands-on engagement with the system. They make inputs, receive outputs, and actively participate in the AI-driven process.

👀A Stakeholder is anyone affected by or interested in the AI system's operations and outcomes, whether they interact with it directly or not. What makes someone a stakeholder is their vested interest in the system's impact, not their level of interaction with it. A regulatory body overseeing fair hiring practices is a stakeholder even if they only review audit reports.

🚫A Misuse Case is a scenario where the system's capabilities could be exploited or misapplied, either intentionally or inadvertently, to achieve outcomes that weren't intended or that could cause harm. What distinguishes a misuse case from a mere risk is that it describes a specific, plausible scenario: a concrete way the system could be weaponised against its intended purpose.

This mapping process often reveals surprising insights. For example, you might find two use cases relying on the same underlying AI capability but applying different thresholds and criteria, leading to inconsistent outcomes. That kind of inconsistency only becomes visible once you map the relationships between systems, capabilities, and use cases.
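Once each use case records the capability it relies on and the decision threshold it applies, this kind of inconsistency can be flagged automatically. A minimal sketch, in which the use-case names, capability IDs and threshold values are all hypothetical:

```python
from collections import defaultdict

# (use case, capability ID, decision threshold); None means no threshold is applied.
# All values are illustrative.
usages = [
    ("Graduate shortlisting", "C-02", 0.70),
    ("Internal mobility matching", "C-02", 0.55),
    ("Executive search", "C-02", 0.70),
    ("Resume standardisation", "C-01", None),
]


def inconsistent_thresholds(rows):
    """Return capabilities that different use cases apply with different thresholds."""
    by_capability = defaultdict(dict)
    for use_case, capability, threshold in rows:
        if threshold is not None:
            by_capability[capability][use_case] = threshold
    return {
        cap: cases
        for cap, cases in by_capability.items()
        if len(set(cases.values())) > 1
    }


for cap, cases in inconsistent_thresholds(usages).items():
    print(cap, cases)
```

A flagged capability is not necessarily a problem; graduate recruitment may reasonably carry a different risk tolerance than executive search. The point is that the difference becomes a visible, deliberate decision rather than an accident.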

The goal isn't just to create a static inventory but to understand the dynamic relationships. When a new use case is proposed, you can quickly see what capabilities already exist that might be leveraged. When a capability needs to be updated, or a system behaviour is observed to have drifted, you can trace which use cases might be affected. This understanding becomes invaluable as your AI landscape grows more complex.
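The tracing described above is essentially a walk over a small graph of links. A minimal sketch, assuming the links are held as plain dictionaries; the capability IDs follow the C-01 style suggested later in this article, and the mappings themselves are hypothetical:

```python
# Which system implements which capability (illustrative)
system_capabilities = {
    "TalentMatch": {"C-01", "C-02", "C-03"},
    "PathFinder": {"C-02", "C-04", "C-05"},
}

# Which capabilities each use case depends on (illustrative)
use_case_capabilities = {
    "Optimise talent placement": {"C-02", "C-04"},
    "Career path guidance": {"C-05"},
    "Resume standardisation": {"C-01"},
}


def systems_providing(capability: str) -> set[str]:
    """Systems that implement a capability; more than one means a shared capability."""
    return {s for s, caps in system_capabilities.items() if capability in caps}


def use_cases_using(capability: str) -> set[str]:
    """Use cases affected if this capability is updated, retrained or drifts."""
    return {uc for uc, caps in use_case_capabilities.items() if capability in caps}


def use_cases_affected_by_system(system: str) -> set[str]:
    """Use cases touched by a change to any capability the system implements."""
    affected: set[str] = set()
    for capability in system_capabilities.get(system, set()):
        affected |= use_cases_using(capability)
    return affected


print(sorted(systems_providing("C-02")))
print(sorted(use_cases_affected_by_system("PathFinder")))
```

The same two dictionaries answer both questions in the paragraph above: read forwards, they tell you which use cases a change will ripple into; read backwards, they tell you which capabilities already exist for a new proposal.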

Understanding users and stakeholders reveals layers of complexity that might not be immediately obvious. In our example, when we map out who's involved in these AI-driven talent decisions, we find concentric circles of impact that extend far beyond the immediate users of the systems.

Consider a specific use case where PathFinder suggests career development opportunities to early-career employees. The direct user is the young professional accessing career guidance through the platform. But the stakeholders? That web extends much further. Their immediate manager has a vested interest in their development path. HR business partners need to ensure the guidance aligns with organizational policies. The learning and development team relies on these recommendations to plan training programs. Senior leadership has stakes in succession planning and talent retention. Even the company's board might be stakeholders if the system affects diversity and inclusion metrics they're accountable for reporting.

TalentMatch presents an even more complex stakeholder landscape. When it screens resumes for job fit, the immediate users are recruiters and hiring managers. But candidates – who never directly interact with the system – are profound stakeholders in its decisions. Professional associations might have interests in how their certifications are weighted. Labour unions could be stakeholders in how the system evaluates experience versus formal qualifications. Regulatory bodies overseeing fair hiring practices are stakeholders, even if they're unaware of specific implementations.

This web of stakeholders becomes crucial when we consider potential misuse scenarios – ways these systems could be exploited or misapplied, either intentionally or inadvertently. Imagine a manager who discovers they can use PathFinder's career trajectory predictions to identify employees likely to leave the company within the next year. While the capability was designed to suggest development opportunities, it could be misused for pre-emptive terminations. Or consider how TalentMatch's skill extraction capability could be misused to build detailed profiles of competitors' employees by running their public LinkedIn profiles through the system.

More subtle misuse scenarios can emerge around capabilities shared across systems. A hiring manager could use PathFinder's internal candidate assessment in combination with TalentMatch's external candidate screening to systematically favour external hires, perhaps incentivised by a recruitment agency relationship. Neither AI system would flag this as problematic because each individual use appears legitimate. PathFinder's career guidance could also be misused to systematically channel employees from certain backgrounds toward specific career paths, creating what appears to be self-selected segregation but is actually driven by biases embedded in historical career progression data.

TalentMatch could be vulnerable to gaming as well. A savvy recruiter could use its bias detection capability in reverse, iteratively adjusting job descriptions not to eliminate bias, but to find language that appears neutral while still favouring certain candidate profiles. Or recruitment agencies could systematically test resumes against the system to optimize their candidates' chances, creating an unfair advantage.

These misuse scenarios underscore why mapping stakeholders is crucial for governance. Each stakeholder brings unique perspectives on potential misuse. Labour representatives might spot exploitation risks that technologists miss. An internal auditor might identify regulatory vulnerabilities that users consider harmless optimisations. Safety and ethics committees might surface subtle biases that neither users nor technical teams anticipated.

By documenting these relationships early – mapping not just who uses the systems but who's affected by them, and imagining how they might be misused – we build a foundation for more robust governance. When we later design controls and monitoring systems, we'll know whose interests need protection and what patterns might indicate misuse.

This mapping also reveals where we need to build in transparency and accountability. For instance, if we know professional associations are stakeholders in how credentials are evaluated, we might need specific audit trails for credential-related decisions. If we've identified potential misuse through combined system capabilities, we might need cross-system monitoring that neither system would require on its own.
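As an illustration of cross-system monitoring, the external-hire scenario described earlier is invisible to either system alone but can surface once PathFinder's shortlisting data is joined with hiring outcomes. The sketch below assumes such a joined record already exists; the field names, the `min_roles` cut-off and the rule itself are hypothetical, and a flag is a prompt for human review rather than evidence of misuse.

```python
from collections import Counter

# Hypothetical joined records, one per filled role.
# internal_shortlisted: internal candidates PathFinder surfaced for the role
# hired_internal: whether the eventual hire came from inside the organisation
placements = [
    {"manager": "mgr-a", "internal_shortlisted": 3, "hired_internal": False},
    {"manager": "mgr-a", "internal_shortlisted": 2, "hired_internal": False},
    {"manager": "mgr-a", "internal_shortlisted": 4, "hired_internal": False},
    {"manager": "mgr-b", "internal_shortlisted": 2, "hired_internal": True},
    {"manager": "mgr-b", "internal_shortlisted": 1, "hired_internal": False},
]


def flag_managers(records, min_roles=3):
    """Flag managers shown internal candidates for at least `min_roles` roles
    who never hired internally."""
    shown = Counter()
    hired = Counter()
    for r in records:
        if r["internal_shortlisted"] > 0:
            shown[r["manager"]] += 1
            if r["hired_internal"]:
                hired[r["manager"]] += 1
    # Counter returns 0 for managers with no internal hires
    return sorted(m for m, n in shown.items() if n >= min_roles and hired[m] == 0)


print(flag_managers(placements))
```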

Building your AI Inventory step-by-step

Let me walk you through how to systematically document your AI landscape in a way that's both thorough and maintainable. Think of this as creating a map that connects all the pieces of your AI ecosystem while capturing their relationships. Remember, we’re focusing on the business and usage layer here; we'll come to the technical mapping in the next article.

1️⃣ Start with a simple spreadsheet – yes, a spreadsheet. While you might eventually migrate to more sophisticated tools, a well-structured spreadsheet provides the flexibility and accessibility needed in these early stages. Create separate tabs for Systems, Capabilities, Use Cases, Users, and Stakeholders. This becomes your master inventory document.

2️⃣ On your Systems tab, begin with just the basics: system name, owner, vendor (if applicable), and a brief description. Don't dive deep into technical details yet – we'll do that in the next phase of our inventory.

3️⃣ Next, move to your Capabilities tab. Here's where you'll list every distinct AI capability these systems provide. Give each capability a unique identifier – something like C-01 for resume analysis or C-02 for job fit prediction. For each capability, note which system or systems implement it. This is where you might start seeing overlaps.

4️⃣ The Use Cases tab is where things get interesting. Create columns for the use case name, description, and primary business objective. You can add columns for risk level, compliance requirements, and data sensitivity (but don’t worry too much right now about completing those if you don’t have information yet).

5️⃣ Now move to the Users tab and list every type of user who directly interacts with these AI systems. Include their role, department, and the use cases they engage with. Be specific: "hiring manager" may be too broad; "technical recruiting manager for engineering" tells a more useful story.

6️⃣ The Stakeholders tab requires careful thought. List every group or entity affected by or interested in each use case, even if they never touch the systems directly. Include columns for stakeholder type (internal/external), their interest or concern, and how they're impacted.

7️⃣ Here's the crucial part, and perhaps the only tricky part of using a spreadsheet for this mapping exercise. It can help at this stage to put all the elements mapped so far on Post-it notes and connect them on a whiteboard. Then go back to the Use Cases tab and record the connections between all these elements. Use a simple matrix format: with use cases already in rows, add columns for the capabilities each one uses, its direct users, and its stakeholders. This becomes your go-to reference for understanding the ripple effects of any changes to your AI systems.

8️⃣ The final mapping step is to add a Misuse Cases tab. Starting from each use case and capability, brainstorm potential ways the system could be misused, whether intentionally or accidentally. Document the scenario, its potential impact, which capabilities could be exploited, and which stakeholders and users would be affected.

9️⃣ Update this document regularly – at minimum, whenever new systems are added or existing ones are significantly modified. Schedule quarterly reviews with system owners and key stakeholders to validate the information and identify any changes in how systems are being used. Pay special attention to "capability creep" – when systems are used in ways that weren't originally intended.
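For teams that would rather keep the inventory under version control alongside other engineering artefacts, the tabs from the steps above can also be held as plain rows and exported to CSV. The sketch below is one possible layout, not a prescribed schema: the column names, the S-/U-/K-/UC-/M- ID prefixes and the semicolon-separated link cells are assumptions. It also automates the part step 7 calls tricky, checking that every link points at a row that actually exists.

```python
import csv
import io

# Illustrative rows for each tab; IDs, column names and values are hypothetical.
tabs = {
    "Systems": [
        {"id": "S-01", "name": "TalentMatch", "owner": "Talent Acquisition",
         "vendor": "", "description": "Recruitment platform"},
        {"id": "S-02", "name": "PathFinder", "owner": "Learning and Development",
         "vendor": "", "description": "Career development suite"},
    ],
    "Capabilities": [
        {"id": "C-01", "name": "Resume analysis", "systems": "S-01"},
        {"id": "C-02", "name": "Job fit prediction", "systems": "S-01;S-02"},
        {"id": "C-03", "name": "Job description bias detection", "systems": "S-01"},
    ],
    "Users": [
        {"id": "U-01", "role": "Technical recruiting manager for engineering",
         "department": "Engineering"},
    ],
    "Stakeholders": [
        {"id": "K-01", "name": "External candidates", "type": "external",
         "interest": "Fair screening"},
    ],
    # Step 7: the relationship columns live on the Use Cases tab
    "Use Cases": [
        {"id": "UC-01", "name": "Optimise talent placement",
         "capabilities": "C-02;C-03", "users": "U-01", "stakeholders": "K-01;K-02"},
    ],
    # Step 8: each misuse case links back to capabilities and affected stakeholders
    "Misuse Cases": [
        {"id": "M-01",
         "scenario": "Job descriptions tuned to look neutral while favouring a profile",
         "capabilities": "C-03", "stakeholders": "K-01"},
    ],
}

# (tab holding the link, link column, tab the link must point into)
LINKS = [
    ("Capabilities", "systems", "Systems"),
    ("Use Cases", "capabilities", "Capabilities"),
    ("Use Cases", "users", "Users"),
    ("Use Cases", "stakeholders", "Stakeholders"),
    ("Misuse Cases", "capabilities", "Capabilities"),
    ("Misuse Cases", "stakeholders", "Stakeholders"),
]


def dangling_links(tabs):
    """Return (tab, row id, missing id) for every link that points at no existing row."""
    problems = []
    for tab, column, target in LINKS:
        known = {row["id"] for row in tabs[target]}
        for row in tabs[tab]:
            for ref in filter(None, row[column].split(";")):
                if ref not in known:
                    problems.append((tab, row["id"], ref))
    return problems


def to_csv(rows):
    """Serialise one tab to CSV text, using the first row's keys as the header."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0]))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


print(dangling_links(tabs))  # K-02 is referenced but has no Stakeholders row
print(to_csv(tabs["Systems"]))
```

Running the link check as part of each quarterly review is a cheap way to catch stakeholders or capabilities that were mentioned in a relationship but never properly documented.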

This living document becomes the foundation for your entire AI governance framework. It informs your risk assessments, guides your monitoring strategies, and helps ensure you're not missing critical stakeholder considerations in your governance decisions. By maintaining it diligently, you create a clear picture of your AI landscape that supports both innovation and responsible governance. I recommend drawing it out on a poster and putting it on your wall for everyone to see and comment on; people will happily provide insights and corrections that continuously improve it.

Keep track of changes with a version history. When updates occur, note what changed and why. This creates an audit trail that helps you understand how your AI landscape evolves over time. It also helps identify patterns, like if certain use cases frequently change or if new misuse scenarios keep emerging around particular capabilities.
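If you keep snapshots of each tab (for example as periodic exports), a simple diff between two snapshots makes the "what changed" part of each version-history entry easy to produce; the "why" still has to come from people. A minimal sketch, assuming each snapshot maps a row ID to its column values; the snapshot contents, the function names and the shape of the log entry are hypothetical.

```python
from datetime import date


def diff_snapshots(old: dict[str, dict], new: dict[str, dict]) -> dict[str, list[str]]:
    """Compare two snapshots of one tab, keyed by row ID."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "changed": sorted(k for k in old.keys() & new.keys() if old[k] != new[k]),
    }


def log_entry(tab: str, changes: dict[str, list[str]], reason: str, when: date) -> dict:
    """Pair the mechanical diff with the human explanation of why it happened."""
    return {"date": when.isoformat(), "tab": tab, "changes": changes, "reason": reason}


# Two illustrative quarterly snapshots of the Use Cases tab
q1 = {
    "UC-01": {"name": "Optimise talent placement", "capabilities": "C-02"},
    "UC-02": {"name": "Career path guidance", "capabilities": "C-05"},
}
q2 = {
    "UC-01": {"name": "Optimise talent placement", "capabilities": "C-02;C-03"},
    "UC-03": {"name": "Graduate shortlisting", "capabilities": "C-01;C-02"},
}

entry = log_entry("Use Cases", diff_snapshots(q1, q2),
                  "Quarterly review with system owners", date(2025, 4, 1))
print(entry)
```

Accumulating these entries over time is what lets you spot the patterns mentioned above, such as a capability that keeps gaining new use cases.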

Most importantly, use this documentation actively, not just for compliance. When someone proposes a new use case, consult your inventory to identify existing capabilities that could support it. When updating a system, check the relationship columns on your Use Cases tab to understand the full scope of impact. When conducting risk assessments, use the misuse scenarios as input for your threat modelling.
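The first and last of those uses can be combined into a quick check when a new use case is proposed: which of the capabilities it needs already exist, which systems provide them, and which recorded misuse cases already touch those capabilities and should feed the threat model. A minimal sketch with hypothetical IDs and entries:

```python
# Illustrative extracts from the Capabilities and Misuse Cases tabs
capabilities = {
    "C-01": {"name": "Resume analysis", "systems": {"TalentMatch"}},
    "C-02": {"name": "Job fit prediction", "systems": {"TalentMatch", "PathFinder"}},
    "C-03": {"name": "Job description bias detection", "systems": {"TalentMatch"}},
}
misuse_cases = {
    "M-01": {"scenario": "Job descriptions tuned to look neutral while favouring a profile",
             "capabilities": {"C-03"}},
    "M-02": {"scenario": "Skill extraction run on competitors' public profiles",
             "capabilities": {"C-01"}},
}


def review_proposal(needed: set[str]) -> dict:
    """Summarise reuse, gaps and known misuse scenarios for a proposed use case."""
    return {
        # Existing capabilities and the systems that already provide them
        "reusable": {c: sorted(capabilities[c]["systems"])
                     for c in sorted(needed) if c in capabilities},
        # Capabilities the proposal needs that nothing in the inventory provides yet
        "missing": sorted(needed - set(capabilities)),
        # Misuse cases linked to any of the needed capabilities
        "misuse_cases": sorted(m for m, case in misuse_cases.items()
                               if case["capabilities"] & needed),
    }


print(review_proposal({"C-01", "C-02", "C-09"}))
```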

So now you have a map of your organisation's AI landscape, focusing on the relationships that matter most: how AI systems deliver capabilities, how those capabilities enable real-world use cases, and how these use cases affect both direct users and broader stakeholders. You have a systematic way to understand and document the functional elements of your AI ecosystem, from the high-level business objectives down to potential misuse scenarios that need monitoring.

In our next article, I'll dive deeper and map out the internal architecture of AI systems – the data that feeds them, the models that drive their decisions, the interfaces through which they interact, and the autonomous agents they may deploy. This technical mapping is crucial because it reveals the hidden connections and dependencies that could affect multiple use cases simultaneously. A data quality issue in a shared training dataset might ripple through multiple AI capabilities in unexpected ways. By combining both views – the business landscape we've mapped here and the technical infrastructure we'll explore next – we'll build a complete picture that supports effective governance at every level.

[1] https://digital-strategy.ec.europa.eu/en/library/commission-publishes-guidelines-ai-system-definition-facilitate-first-ai-acts-rules-application
