Are you building a High-Risk AI System?

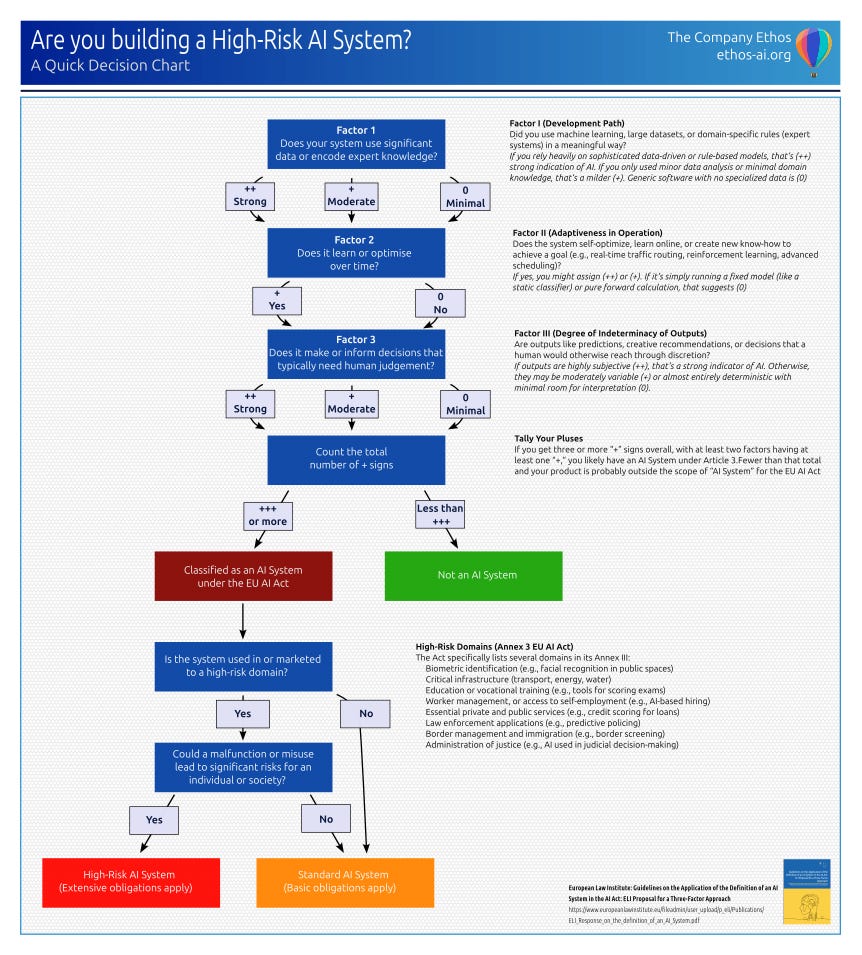

Your software is AI-powered, but does that mean it's a 'High-Risk AI System' under the law? It is crucial to know in advance. Here's a decision chart that can help.

So you’re building software that uses machine learning, you’re developing it with the help of AI, and it helps your customers or employees make intelligent decisions. But is it an “AI system” under new or emerging regulations like the EU AI Act? And if so, is it ‘high-risk’ and subject to more rigorous regulatory compliance requirements? The answers may surprise you, and they’re not clear-cut. So, drawing on some great research from the European Law Institute [1], I put together a simple flowchart that can help.

The distinction really does matter, especially if you’re a startup launching a new product or service. If your software qualifies as an "AI system" under these new laws, some obligations (such as AI literacy and certain transparency duties) can apply regardless of whether you have a single user or a million users, and if it is also high-risk, you'll need to meet substantial requirements around safety, transparency, and risk management. And although the EU is driving these definitions and requirements now, its approach is influencing regulation worldwide, so other countries are likely to follow or at least build upon what it defines as high-risk AI.

A quick refresher on the definition of a high-risk AI System

Article 3(1) of the EU AI Act defines an “AI system” as:

“A machine-based system that is designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment, and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments.”

In simpler terms, if you’re building something that:

Runs on machines (software, hardware, or both);

Operates with some level of autonomy (even partially) and may update or change (adaptiveness);

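Takes data inputs and infers from them how to generate outputs (predictions, content, recommendations, or decisions) that can influence people, systems, or other physical or virtual environments,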

…it likely meets the Act’s definition of an AI system. However, this definition is so broad that it could be interpreted to rope in many different types of software.
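The checklist above can be expressed as a rough screening function. This is a minimal sketch, assuming each element of the definition can be answered with a plain yes or no (in practice, that answer is exactly where the legal interpretation happens); the class and field names are hypothetical. It makes one point explicit: because the Act says a system "may exhibit" adaptiveness, a model that never changes after deployment can still meet the definition.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SystemProfile:
    """Hypothetical yes/no answers to the elements of the Article 3(1) definition."""
    machine_based: bool
    operates_with_some_autonomy: bool
    infers_how_to_generate_outputs: bool      # predictions, content, recommendations, decisions
    outputs_can_influence_environments: bool  # physical or virtual environments
    adaptive_after_deployment: bool = False   # the Act says "may exhibit": optional


def meets_definition_elements(profile: SystemProfile) -> bool:
    """Rough screen: all required elements present; adaptiveness is deliberately ignored."""
    return (
        profile.machine_based
        and profile.operates_with_some_autonomy
        and profile.infers_how_to_generate_outputs
        and profile.outputs_can_influence_environments
    )


# A model whose weights are frozen after deployment can still meet the definition.
frozen_model = SystemProfile(
    machine_based=True,
    operates_with_some_autonomy=True,
    infers_how_to_generate_outputs=True,
    outputs_can_influence_environments=True,
    adaptive_after_deployment=False,
)
print(meets_definition_elements(frozen_model))  # True
```

The inference element carries most of the weight: software that only executes fixed rules written by a person would, under this screen, be answered "no" on inference and fall outside the definition.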

An AI system is considered “high-risk” if: (a) a malfunction or misuse could lead to significant risks for individuals or society; and (b) it is marketed or used in a domain with heightened direct impact on health, safety, or fundamental rights, such as the following [2]:

Biometric identification (e.g., facial recognition in public spaces)

Critical infrastructure (transport, energy, water) where a malfunction could endanger health or safety

Education or vocational training (e.g., tools for scoring exams)

Employment, worker management, and access to self-employment (e.g., AI-based hiring)

Essential private and public services (e.g., credit scoring for loans)

Law enforcement applications (e.g., predictive policing)

Border management and immigration (e.g., border screening)

Administration of justice (e.g., AI used in judicial decision-making)

Both definitions are broad enough to inadvertently rope in systems and uses that are not inherently of concern. High-risk AI systems are subject to stricter rules under the EU AI Act, including:

Risk Management: Ongoing, systematic evaluation of potential harms or biases the system could produce.

Data Governance and Documentation: Detailed record-keeping on training data, validation sets, model assumptions, etc.

Transparency and Logging Obligations: Clear instructions or disclaimers for users, plus robust logging to support auditing.

Human Oversight Requirements: Ensuring humans can meaningfully monitor and intervene if the system malfunctions or generates undesirable outcomes.

Post-Market Monitoring: Tracking real-world performance to catch issues early, with potential mandatory reporting obligations.

Reading the 50,000+ words of the EU AI Act to figure out whether your next great startup idea or internal application is high-risk is no easy feat (nor a great return on your time). Instead, we need a quick cheatsheet to help determine from the outset whether your application is a high-risk AI system.

A simple decision flow-chart

Ok, so here’s the quick and easy flowchart I now use to cut through the abstractions and answer the two crucial questions:

Is my system considered an ‘AI System’ under the regulations?

If it is an AI system, is it ‘high-risk’?

The European Law Institute (ELI) work really helps here: it proposes a framework that evaluates a system along three main factors:

Data/Domain-Specific Knowledge (Factor I): How much data, statistical analysis, or domain expertise went into building it? If developers used vast datasets or encoded complex, specialised knowledge (like in expert systems), that pushes the system closer to being AI.

Creation of New Know-How During Operation (Factor II): Does the system generate fresh insights or optimise itself while running, possibly using machine learning or search algorithms? Agentic systems would score highly on this factor while recommender engines that adapt based on real-time feedback would have a more moderate score.

Formal Indeterminacy of Outputs (Factor III): Are the system’s outputs the kind of things (e.g., recommendations or creative content) that humans would otherwise generate by exercising some level of discretion or judgment? If yes, that also nudges the software toward the AI label.

In the ELI model, if a system collectively scores at least three “pluses” across these factors, it is likely an ‘AI System’. For instance, large-language-model chatbots often use massive datasets (Factor I: ++), rely on forward calculations rather than creating new know-how while they run (Factor II: 0), and produce outputs that require subjective judgment (Factor III: ++). That sums to four “+”, comfortably above the threshold of three, so it qualifies as an AI system. You can read the full details, with lots of examples to help on borderline cases, in the ELI paper [1].
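The scoring rule above can be sketched in a few lines of Python. This is a minimal sketch, assuming each factor's score is simply the number of “+” it earns (so “0” counts as zero) and that the threshold is the three pluses described above; the names FactorScores, parse_pluses, and is_likely_ai_system are hypothetical and do not come from the ELI paper. Treat it as a rough aid, not a substitute for the paper's worked examples.

```python
from dataclasses import dataclass

# Rule of thumb from the ELI approach: at least three "+" in total.
AI_SYSTEM_THRESHOLD = 3


@dataclass(frozen=True)
class FactorScores:
    """Hypothetical container: number of "+" a system earns on each ELI factor."""
    data_domain_knowledge: int  # Factor I
    new_know_how: int           # Factor II
    output_indeterminacy: int   # Factor III

    def total(self) -> int:
        return self.data_domain_knowledge + self.new_know_how + self.output_indeterminacy


def parse_pluses(score: str) -> int:
    """Convert a score written as "0", "+", "++", ... into a count of pluses."""
    if score in ("", "0"):
        return 0
    if set(score) != {"+"}:
        raise ValueError(f"unexpected score notation: {score!r}")
    return len(score)


def is_likely_ai_system(scores: FactorScores, threshold: int = AI_SYSTEM_THRESHOLD) -> bool:
    """A collective total of at least `threshold` pluses suggests an AI system."""
    return scores.total() >= threshold


# The LLM chatbot example from the text: Factor I ++, Factor II 0, Factor III ++.
chatbot = FactorScores(parse_pluses("++"), parse_pluses("0"), parse_pluses("++"))
print(chatbot.total(), is_likely_ai_system(chatbot))  # 4 True
```

Writing the sum out makes it easy to see how far above or below the threshold a system sits, which is a useful thing to record when documenting a borderline case.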

Figuring out if the system is "high-risk" seems more straightforward. First, ask yourself if it will be marketed or used in an area of life where mistakes could seriously impact people's safety, rights, or opportunities. Think healthcare, education, law enforcement, job hiring, or critical infrastructure. But just working in these domains isn't enough on its own.

The second condition is that the AI system needs to play a significant role in making important decisions or assessments within that domain. For example, an AI system that helps schedule hospital cleaning crews probably isn't high-risk, even though it's used in healthcare. But an AI system that helps diagnose patients or recommend treatments? That's definitely high-risk, because its decisions directly affect patient safety.
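Putting the two questions together, the flowchart's order can be sketched as a single function: check the ELI rule of thumb first, then apply both high-risk conditions. This is a minimal sketch, assuming a system's ELI score has already been totalled; the domain labels are hypothetical shorthand for the areas named in this article (not an official or exhaustive taxonomy), and the plus totals in the examples are hypothetical too.

```python
from dataclasses import dataclass

# Hypothetical shorthand for the domains named in this article; not an official taxonomy.
HIGH_RISK_DOMAINS = frozenset({
    "healthcare",
    "biometric_identification",
    "critical_infrastructure",
    "education",
    "employment",
    "essential_services",
    "law_enforcement",
    "border_and_immigration",
    "administration_of_justice",
})

# ELI rule of thumb: at least three "+" across Factors I-III.
AI_SYSTEM_THRESHOLD = 3


@dataclass(frozen=True)
class UseCase:
    description: str
    eli_plus_total: int              # total "+" across ELI Factors I-III
    domain: str                      # free-text label, matched against HIGH_RISK_DOMAINS
    significant_decision_role: bool  # does it drive or materially shape important decisions?


def classify(use_case: UseCase) -> str:
    """Walk the two questions in order: is it an AI system, and if so, is it high-risk?"""
    if use_case.eli_plus_total < AI_SYSTEM_THRESHOLD:
        return "probably not an AI system"
    # Condition 1: marketed or used in a domain where mistakes seriously affect people.
    in_high_risk_domain = use_case.domain in HIGH_RISK_DOMAINS
    # Condition 2: plays a significant role in important decisions or assessments there.
    if in_high_risk_domain and use_case.significant_decision_role:
        return "likely high-risk AI system"
    return "AI system, likely not high-risk"


# The two hospital examples from the text, with hypothetical plus totals.
cleaning_rota = UseCase("Schedules hospital cleaning crews", 3, "healthcare", False)
diagnosis_aid = UseCase("Helps diagnose patients and recommend treatments", 4, "healthcare", True)
print(classify(cleaning_rota))  # AI system, likely not high-risk
print(classify(diagnosis_aid))  # likely high-risk AI system
```

Checking the AI-system question first mirrors the flowchart: if the software is not an AI system, the high-risk question does not arise under the Act.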

You can download the decision-chart here:

By mapping out your AI system against these criteria, and documenting the results, you’ll have a clearer sense of whether your next great idea is high-risk and, if so, can start planning for the EU AI Act’s heightened standards. Keep in mind this is still a generalised tool: nothing replaces analysis by an AI assurance professional or legal advice where edge cases are involved. If you’re on the borderline (e.g., your system uses advanced analytics but not true machine learning, or you’re partially in a high-risk domain), you may want expert input to confirm your interpretations.

If you find this useful, you may also like to read an article on the broader skills map of an AI assurance professional.

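[1] European Law Institute, response on the definition of an AI system: https://www.europeanlawinstitute.eu/fileadmin/user_upload/p_eli/Publications/ELI_Response_on_the_definition_of_an_AI_System.pdf

[2] EU AI Act, Annex III (high-risk AI systems): https://artificialintelligenceact.eu/annex/3/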
