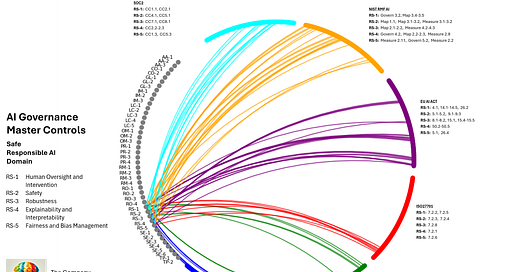

AI Governance Mega-map: Safe, Responsible AI and System, Data & Model Lifecycle

Diving into the two control domains most important to safe, responsible AI in our mega-map of AI governance, drawing from ISO 42001/27001/27701, SOC2, the NIST AI RMF and the EU AI Act.

We've covered a lot of ground - from the foundational domains of leadership and risk management through to the technical safeguards of security and privacy. But now we arrive at the intersection where technical innovation meets ethical responsibility.

First, we'll explore Safe & Responsible AI - the controls that ensure AI systems don't just perform well, but do so in ways that benefit society while minimising potential harms. Think of these as guardrails that keep AI innovation on track while preventing unintended consequences.

Then I'll go through System, Data & Model Lifecycle controls - the practical mechanisms that maintain quality and accountability from initial data collection through to model retirement. This is where theoretical principles meet day-to-day reality, where we ensure that good intentions translate into responsible practices throughout an AI system's existence.

These domains are particularly crucial because they represent the bridge between high-level governance principles and tangible outcomes. It's one thing to say we want responsible AI development - it's another to implement specific controls that ensure models are fair, transparent, and accountable. Similarly, while everyone agrees on the importance of good data practices, implementing systematic controls throughout the AI lifecycle requires careful attention to both technical detail and ethical implications.

Let’s go.

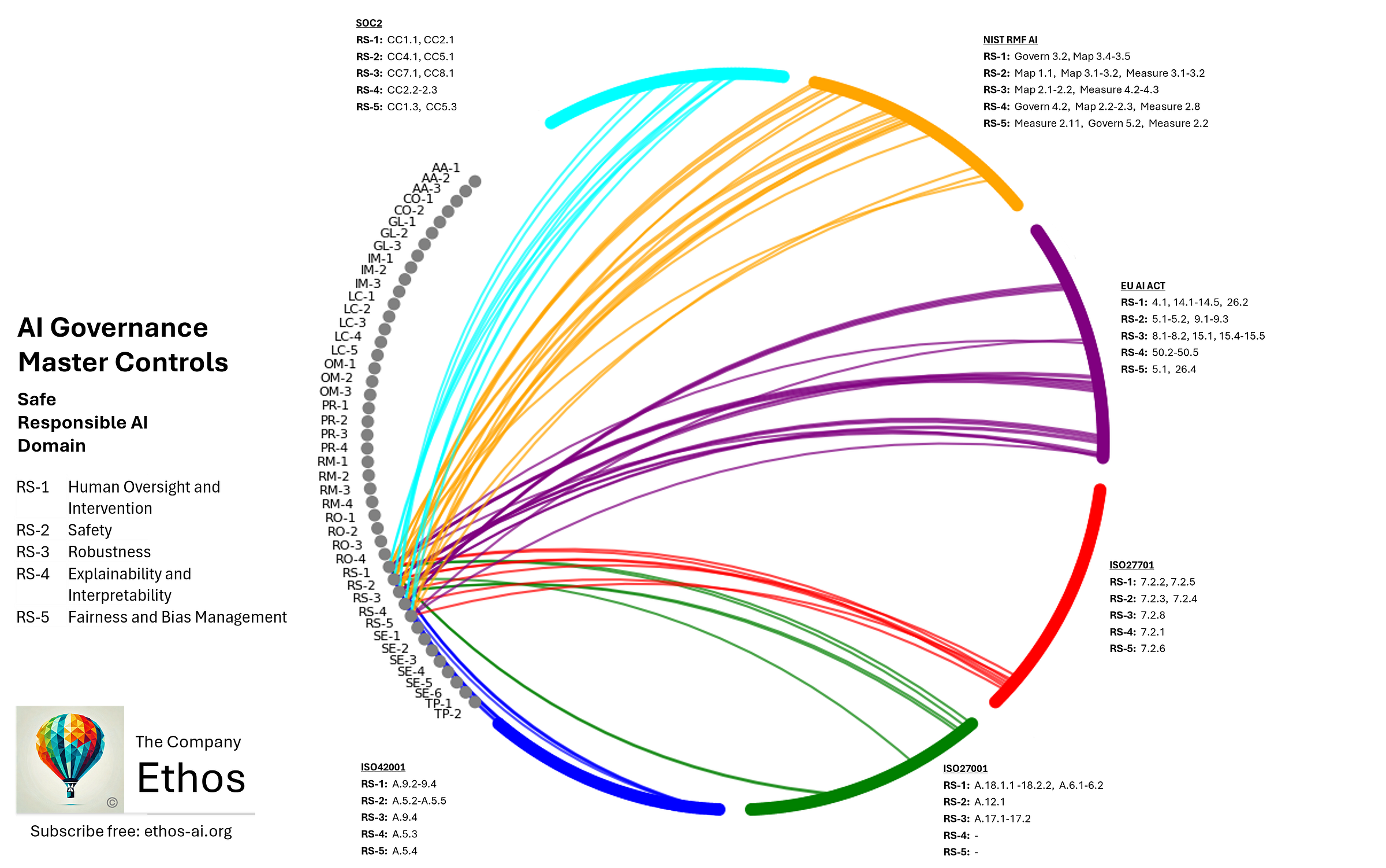

Safe, Responsible AI

RS-1 Human Oversight and Intervention

The organisation shall implement mechanisms for meaningful human oversight of AI systems, ensuring humans maintain appropriate control over AI decision-making. This includes clearly defined procedures for human monitoring, intervention capabilities, and authority to override AI systems when necessary. Personnel responsible for oversight must receive appropriate training and have sufficient competence and authority to fulfil their responsibilities effectively.

RS-2 Safety

The organisation shall establish and maintain processes to prevent AI systems from producing outputs that could cause harm to individuals, groups, or society. This includes comprehensive impact assessments, monitoring for potential harms, and implementation of safeguards to prevent prohibited uses or manipulative practices. The organisation must maintain documented evidence of harm prevention measures and regularly assess their effectiveness.

RS-3 Robustness

The organisation shall ensure AI systems demonstrate consistent and reliable performance across their intended operating conditions, including edge cases and unexpected scenarios. Systems must be resilient against errors, adversarial attacks, and data quality issues. Regular testing and monitoring shall be conducted to verify robustness, with particular attention to system behaviour under stress conditions or when encountering novel situations.

RS-4 Explainability and Interpretability

The organisation shall ensure AI systems' decisions and outputs can be appropriately explained and interpreted by relevant stakeholders. This includes maintaining comprehensive documentation of system behaviour, providing clear explanations of AI-driven decisions when required, and ensuring transparency about system capabilities and limitations. Methods for generating explanations must be appropriate to the context and audience.

RS-5 Fairness and Bias Management

The organisation shall implement processes to identify, assess, and mitigate unfair bias in AI systems throughout their lifecycle. This includes ensuring training data is representative and appropriate, regularly testing for disparate impact across protected characteristics, and maintaining documented evidence of fairness assessments and mitigation measures. The organisation must regularly validate that AI systems maintain fairness standards in operation.

Safe and Responsible AI is where we try to connect the ethical imperatives of AI to technical controls and mechanisms so we can build AI systems while minimising harm.

RS-1 Human Oversight and Intervention

At the core of this domain is human oversight. Effective oversight means that even when AI systems operate autonomously, humans remain in control of decision-making. ISO 42001 (A.9.2, A.9.3, A.9.4) outlines the need for mechanisms that ensure human intervention is possible at critical junctures, while ISO 27001 (A.18.1.1, A.18.2.2, A.6.1, A.6.2) provides the security framework that supports these controls, even if it doesn’t specifically reference AI. This is further extended by ISO 27701 (7.2.2, 7.2.5), which emphasises that personnel tasked with oversight must be sufficiently trained and competent. The EU AI Act (14.1–14.5, 26.2, 4.1) reinforces this requirement by mandating that human oversight, human-readable explanations and override capabilities are integral to high-risk AI systems. Moreover, NIST AI RMF (Govern 3.2, Map 3.4, Map 3.5) and SOC2 (CC1.1, CC2.1) stress that clear governance and documented accountability are essential to maintain control over AI development processes.
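The Python sketch below makes the override requirement more concrete. It shows one common oversight pattern: a confidence-based gate that lets confident outputs through and routes uncertain ones to a human reviewer. The reviewer can accept or override those outputs. The `oversight_gate` function, the `Decision` fields and the 0.8 threshold are hypothetical illustrations. None of the frameworks above prescribe this particular mechanism.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Decision:
    """Outcome of one AI-assisted decision, with a record of who made the final call."""
    model_output: str
    confidence: float
    final_output: str
    decided_by: str  # "model" or "human"
    overridden: bool = False


# A reviewer receives the model's output and confidence and returns either
# None (accept the model's output) or a replacement output (override).
Reviewer = Callable[[str, float], Optional[str]]


def oversight_gate(model_output: str, confidence: float,
                   reviewer: Reviewer, threshold: float = 0.8) -> Decision:
    """Auto-accept confident outputs; route everything else to a human."""
    if confidence >= threshold:
        return Decision(model_output, confidence, model_output, decided_by="model")
    human_choice = reviewer(model_output, confidence)
    if human_choice is None:
        return Decision(model_output, confidence, model_output, decided_by="human")
    return Decision(model_output, confidence, human_choice,
                    decided_by="human", overridden=True)


# Example: a reviewer who refers low-confidence rejections for manual handling.
def cautious_reviewer(output: str, confidence: float) -> Optional[str]:
    return "refer" if output == "reject" else None


print(oversight_gate("approve", 0.95, cautious_reviewer))
print(oversight_gate("reject", 0.55, cautious_reviewer))
```

The `decided_by` and `overridden` fields record who made the final call. That record is what turns the gate into audit evidence rather than a simple routing rule.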

RS-2 Safety

Safety is next, ensuring that systems are designed to prevent outputs that could harm individuals, groups, or society at large. ISO 42001 (A.5.2, A.5.3, A.5.4, A.5.5) calls for comprehensive impact assessments and safety measures that address potential risks throughout the AI lifecycle. In tandem, ISO 27001 (A.12.1) provides the operational security foundation, while ISO 27701 (7.2.3, 7.2.4) adds privacy-focused safeguards. The EU AI Act (5.1, 5.2, 9.1–9.3) further demands rigorous safety controls for high-risk systems, and NIST AI RMF (Map 1.1, Map 3.1, Map 3.2, Measure 3.1, Measure 3.2) guides organisations in monitoring safety continuously. SOC2 (CC4.1, CC5.1) focuses on accountability for these efforts by ensuring that documented safety controls are both effective and auditable.

RS-3 Robustness

Robustness is about assuring ourselves that AI systems maintain consistent performance even under unexpected conditions. To reflect this, ISO 42001 (A.9.4) requires that systems be designed to be resilient against errors and adversarial inputs, while ISO 27001 (A.17.1, A.17.2) provides guidelines for maintaining system integrity under stress. The EU AI Act (8.1, 8.2, 15.1, 15.4, 15.5) stresses the importance of resilience in ensuring continuous safe operation, and NIST AI RMF (Map 2.1, Map 2.2, Measure 4.2, Measure 4.3) advises regular stress testing and monitoring. SOC2 (CC7.1, CC8.1) reinforces that a robust system must be capable not only of preventing failures but also of recovering gracefully when they occur.
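The sketch below roughly illustrates what regular robustness testing can look like. It adds small Gaussian noise to each input and reports the share of inputs whose prediction never changes. It assumes a classifier exposed as a plain `predict` function over lists of numbers; the `perturbation_stability` name and its defaults are hypothetical. This check is far weaker than genuine adversarial testing, which searches for worst-case inputs rather than random ones.

```python
import random
from typing import Callable, Sequence


def perturbation_stability(predict: Callable[[Sequence[float]], int],
                           inputs: Sequence[Sequence[float]],
                           noise_scale: float = 0.05,
                           trials: int = 20,
                           seed: int = 0) -> float:
    """Fraction of inputs whose predicted label survives every noisy trial.

    Each trial adds independent Gaussian noise (std = noise_scale) to every
    feature; an input counts as stable only if no trial changes its label.
    """
    rng = random.Random(seed)  # fixed seed keeps test runs reproducible
    stable = 0
    for x in inputs:
        baseline = predict(x)
        if all(predict([v + rng.gauss(0.0, noise_scale) for v in x]) == baseline
               for _ in range(trials)):
            stable += 1
    return stable / len(inputs)


# Toy model: approve when the two features sum to more than 1.0.
def toy_model(x: Sequence[float]) -> int:
    return int(sum(x) > 1.0)


far_from_boundary = [[0.0, 0.0], [2.0, 2.0]]
on_boundary = [[0.5, 0.5]]
print(perturbation_stability(toy_model, far_from_boundary))
print(perturbation_stability(toy_model, on_boundary))
```

A stability score that falls between releases is worth investigating. The code cannot say what score is acceptable; that is a decision for your risk assessment.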

RS-4 Explainability and Interpretability

With AI systems often operating as “black boxes,” it is vital that stakeholders can understand their decision-making processes. ISO 42001 (A.5.3) mandates that AI systems be designed with transparency in mind, while ISO 27701 (7.2.1) further requires that these explanations be clear and accessible. The EU AI Act (50.2, 50.3, 50.4, 50.5) sets transparency obligations, such as disclosing AI-generated or manipulated content and the use of emotion recognition, so that affected people and regulators understand what they are dealing with. Additionally, NIST AI RMF (Govern 4.2, Map 2.2, Map 2.3, Measure 2.8) supports structured reporting and documentation of system behaviour, and SOC2 (CC2.2, CC2.3) emphasises that explainability must be built into the governance process to enable effective oversight and redress.

RS-5 Fairness and Bias Management

Finally, fairness and bias management are indispensable to ensure that AI systems operate equitably. ISO 42001 (A.5.4) highlights the need for mechanisms that detect and mitigate bias throughout the AI lifecycle, while ISO 27701 (7.2.6) underlines the importance of fair data processing practices. The EU AI Act (5.1, 26.4) imposes strict requirements to prevent discriminatory outcomes in high-risk AI applications, and NIST AI RMF (Measure 2.11, Govern 5.2, Measure 2.2) advises regular assessments of fairness across different demographic groups. SOC2 (CC1.3, CC5.3) further mandates that any biases be documented and addressed, ensuring that AI systems produce outputs that are not only accurate but also socially responsible.
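Testing for disparate impact often starts by comparing selection rates across groups. The sketch below computes each group's rate of favourable outcomes relative to the best-treated group. It flags ratios under 0.8, following the "four-fifths rule" heuristic from US employment-selection guidelines. That threshold is an assumption for illustration. None of the frameworks cited above mandate it, and a single ratio is no substitute for a full fairness assessment. The example data is invented.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of favourable outcomes per group, from (group, outcome) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    favourable: Dict[str, int] = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        favourable[group] += int(outcome)
    return {group: favourable[group] / totals[group] for group in totals}


def disparate_impact(records: Iterable[Tuple[str, bool]],
                     threshold: float = 0.8) -> Dict[str, Tuple[float, bool]]:
    """Each group's selection rate divided by the highest group's rate,
    paired with a flag that is True when the ratio falls below `threshold`."""
    rates = selection_rates(records)
    best = max(rates.values())
    report = {}
    for group, rate in rates.items():
        ratio = rate / best if best > 0 else 1.0  # no favourable outcomes at all
        report[group] = (ratio, ratio < threshold)
    return report


# Invented example: group A approved 8/10, group B approved 5/10.
example = ([("A", True)] * 8 + [("A", False)] * 2 +
           [("B", True)] * 5 + [("B", False)] * 5)
print(disparate_impact(example))
```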

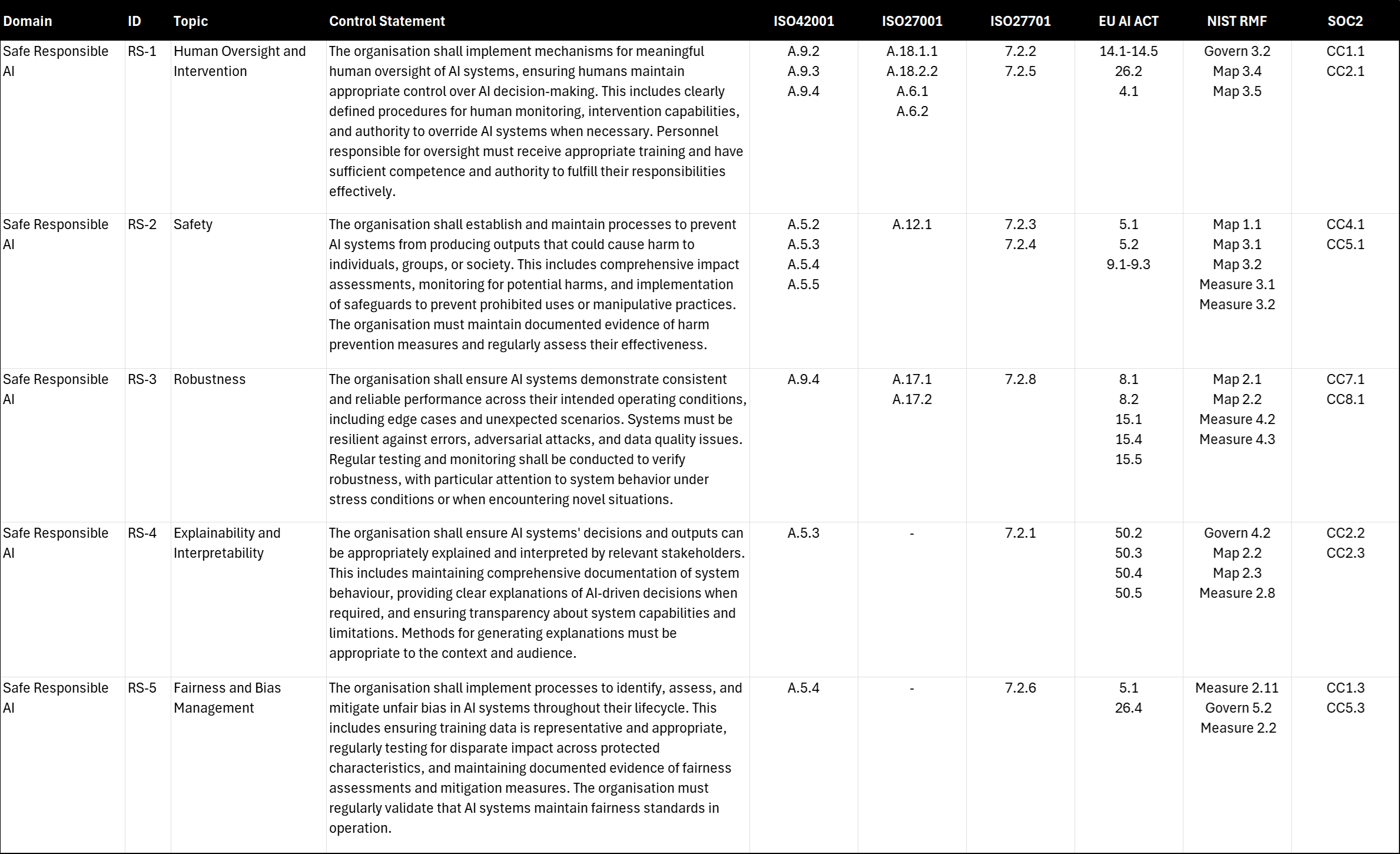

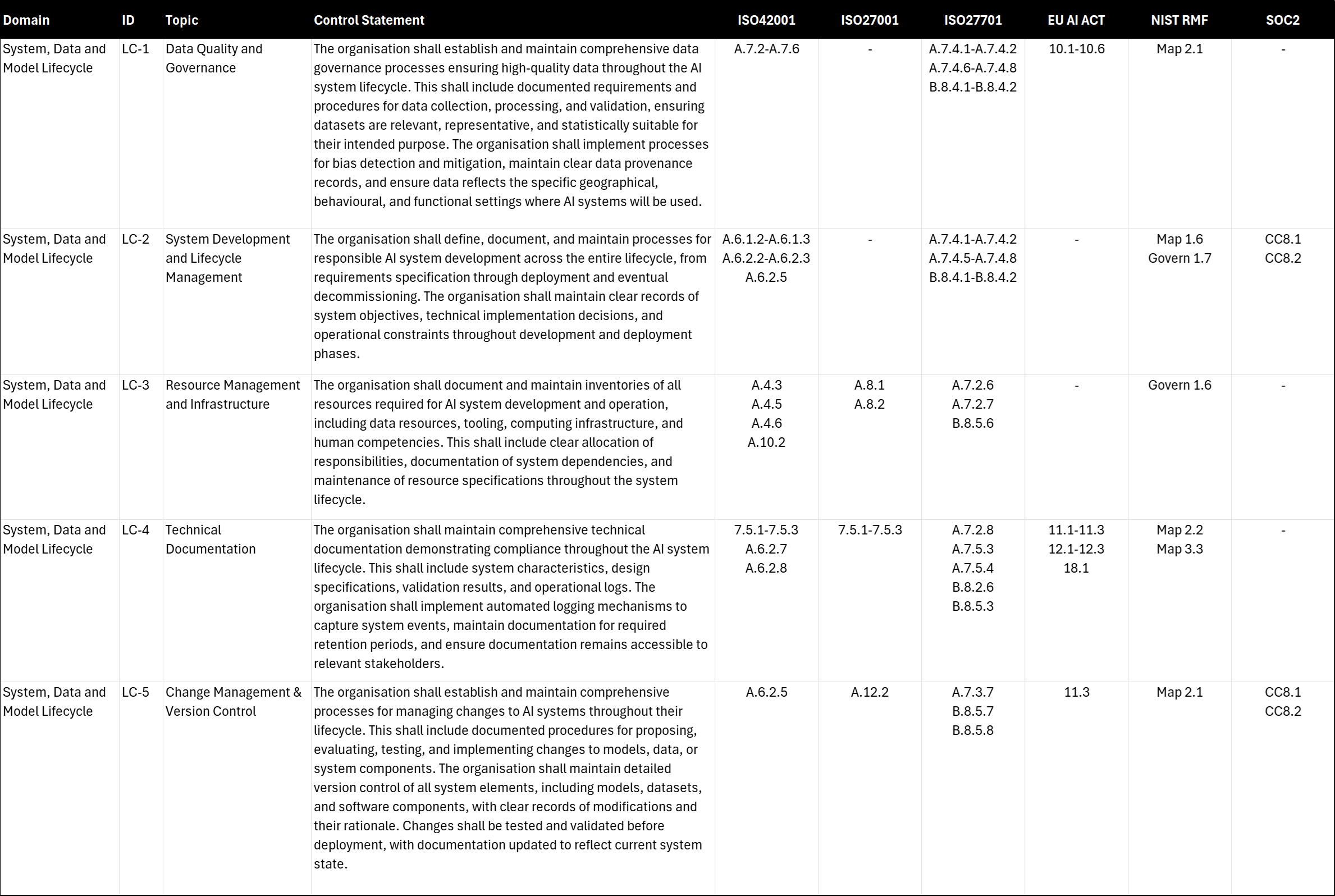

System, Data and Model Lifecycle

LC-1 Data Quality and Governance

The organisation shall establish and maintain comprehensive data governance processes ensuring high-quality data throughout the AI system lifecycle. This shall include documented requirements and procedures for data collection, processing, and validation, ensuring datasets are relevant, representative, and statistically suitable for their intended purpose. The organisation shall implement processes for bias detection and mitigation, maintain clear data provenance records, and ensure data reflects the specific geographical, behavioural, and functional settings where AI systems will be used.

LC-2 System Development and Lifecycle Management

The organisation shall define, document, and maintain processes for responsible AI system development across the entire lifecycle, from requirements specification through deployment and eventual decommissioning. The organisation shall maintain clear records of system objectives, technical implementation decisions, and operational constraints throughout development and deployment phases.

LC-3 Resource Management and Infrastructure

The organisation shall document and maintain inventories of all resources required for AI system development and operation, including data resources, tooling, computing infrastructure, and human competencies. This shall include clear allocation of responsibilities, documentation of system dependencies, and maintenance of resource specifications throughout the system lifecycle.

LC-4 Technical Documentation

The organisation shall maintain comprehensive technical documentation demonstrating compliance throughout the AI system lifecycle. This shall include system characteristics, design specifications, validation results, and operational logs. The organisation shall implement automated logging mechanisms to capture system events, maintain documentation for required retention periods, and ensure documentation remains accessible to relevant stakeholders.

LC-5 Change Management & Version Control

The organisation shall establish and maintain comprehensive processes for managing changes to AI systems throughout their lifecycle. This shall include documented procedures for proposing, evaluating, testing, and implementing changes to models, data, or system components. The organisation shall maintain detailed version control of all system elements, including models, datasets, and software components, with clear records of modifications and their rationale. Changes shall be tested and validated before deployment, with documentation updated to reflect current system state.

LC-1 Data Quality and Governance

One of the most significant factors influencing an AI system’s outcomes is the quality and governance of its underlying data. ISO 42001 (A.7.2–A.7.6) lays out processes to ensure that datasets are relevant, representative, and properly validated. Meanwhile, ISO 27701 (A.7.4.1–A.7.4.2, A.7.4.6–A.7.4.8, B.8.4.1–B.8.4.2) extends these requirements to privacy and data handling specifics, ensuring that personal data is collected, stored, and used ethically. The EU AI Act (Articles 10.1–10.6) describes the need to demonstrate that training data is appropriate for the real-world contexts in which AI systems operate. This is especially critical for high-risk systems that can impact human rights or safety. NIST AI RMF (Map 2.1) also emphasises mapping and measuring data risks, calling for bias detection and mitigation strategies. In practice, this means establishing data governance policies that document where data originates, how it’s processed, and how you make sure it remains accurate over time.
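A provenance record can be as simple as one structured entry per dataset. The sketch below uses hypothetical field names. It captures where the data came from, a SHA-256 fingerprint of the exact bytes used, the intended deployment context, and each processing step. The record is serialised to JSON so it can sit alongside the rest of your technical documentation.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from typing import List


def fingerprint(data: bytes) -> str:
    """SHA-256 hex digest identifying the exact bytes of a dataset."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class ProvenanceRecord:
    dataset_name: str
    source: str            # where the data originated
    content_sha256: str    # fingerprint of the data actually used
    intended_context: str  # geographical, behavioural or functional setting
    processing_steps: List[str] = field(default_factory=list)

    def add_step(self, description: str) -> None:
        """Append a processing step in the order it was applied."""
        self.processing_steps.append(description)

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)


# Hypothetical example dataset and source.
raw = b"age,income,approved\n34,52000,1\n"
record = ProvenanceRecord(
    dataset_name="loan_applications_v1",
    source="CRM export (hypothetical)",
    content_sha256=fingerprint(raw),
    intended_context="UK retail lending applicants",
)
record.add_step("dropped rows with missing income")
print(record.to_json())
```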

LC-2 System Development and Lifecycle Management

Developing an AI system is rarely a one-and-done process; it’s an iterative lifecycle (loosely following a pattern called MLOps) that spans initial requirements through deployment and eventual decommissioning. ISO 42001 (A.6.1.2–A.6.1.3, A.6.2.2–A.6.2.3, A.6.2.5) advocates responsible AI design, requiring organisations to document system objectives, technical implementation decisions, and operational constraints at each stage. ISO 27701 (A.7.4.1–A.7.4.2, A.7.4.5–A.7.4.8, B.8.4.1–B.8.4.2) extends these controls to incorporate privacy considerations into the development pipeline, ensuring personal data is handled appropriately from the outset. NIST AI RMF (Map 1.6, Govern 1.7) highlights how governance roles should be embedded throughout the lifecycle, while SOC2 (CC8.1, CC8.2) mandates clear accountability for design and testing decisions. In practical terms, this translates into a documented, step-by-step process in which every decision is recorded: requirements gathering, system design, testing, validation, deployment, and retirement. This isn’t just record-keeping for accountability; it also helps you detect and rectify issues before they become entrenched.
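A stage gate is one way to make "every decision is recorded" enforceable. The gate refuses to move a system to the next lifecycle stage until at least one decision has been logged for the current one. The stage list below mirrors the steps named above. The `LifecycleRecord` class and its gating rule are hypothetical, a sketch rather than a prescribed MLOps tool.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Tuple

# Stages as listed in the lifecycle description above.
STAGES = ["requirements", "design", "testing", "validation", "deployment", "retirement"]


@dataclass
class LifecycleRecord:
    system_name: str
    stage_index: int = 0
    # Each entry: (UTC timestamp, stage, owner, decision text)
    decisions: List[Tuple[str, str, str, str]] = field(default_factory=list)

    @property
    def stage(self) -> str:
        return STAGES[self.stage_index]

    def record(self, decision: str, owner: str) -> None:
        """Log a decision against the current stage."""
        timestamp = datetime.now(timezone.utc).isoformat()
        self.decisions.append((timestamp, self.stage, owner, decision))

    def advance(self, approver: str) -> str:
        """Move to the next stage, but only if the current one has a recorded decision."""
        if not any(entry[1] == self.stage for entry in self.decisions):
            raise ValueError(f"no decisions recorded for stage '{self.stage}'")
        if self.stage_index == len(STAGES) - 1:
            raise ValueError("system is already retired")
        self.record(f"approved exit from {self.stage}", approver)
        self.stage_index += 1
        return self.stage


# Hypothetical system and people.
lifecycle = LifecycleRecord("credit-risk-model")
lifecycle.record("objective: rank applications by default risk", owner="product lead")
print(lifecycle.advance(approver="risk committee"))
```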

LC-3 Resource Management and Infrastructure

Building AI systems takes more than data and algorithms: any system depends on an array of resources, from specialised tooling to skilled personnel. ISO 42001 (A.4.3, A.4.5, A.4.6, A.10.2) calls for inventories of data resources, software tools, computing infrastructure, and human competencies. ISO 27001 (A.8.1, A.8.2) complements this by emphasising asset management, ensuring that organisations can track hardware, software, and information assets. ISO 27701 (A.7.2.6, A.7.2.7, B.8.5.6) adds privacy-related requirements for resource documentation. The NIST AI RMF (Govern 1.6) places a parallel emphasis on matching the organisation’s risk tolerance with the available resources. This is especially crucial when dealing with high-stakes AI applications. In practice, resource management extends beyond hardware and software to clarifying roles and responsibilities and maintaining a knowledge base of system dependencies. You might want to look at my articles on how to build an AI inventory for guidance on this set of controls.[1]

LC-4 Technical Documentation

Documentation is often seen as the “paper trail” of AI development, but modern frameworks treat it as a living resource that underpins transparency and accountability. ISO 42001 (7.5.1–7.5.3, A.6.2.7, A.6.2.8) and ISO 27001 (7.5.1–7.5.3) both require organisations to maintain technical documentation covering system characteristics, design decisions, validation results, and operational logs. ISO 27701 (A.7.2.8, A.7.5.3, A.7.5.4, B.8.2.6, B.8.5.3) adds privacy-specific details, ensuring that documentation reflects how personal data is processed and protected. The EU AI Act (Articles 11.1–11.3, 12.1–12.3, 18.1) stresses documentation for high-risk AI systems, requiring proof of compliance at every stage. Meanwhile, NIST AI RMF (Map 2.2, Map 3.3) highlights how documentation supports mapping and measuring AI risks, while also informing ongoing system monitoring. Implementing these controls effectively means putting in place automated logging mechanisms that capture events throughout the AI lifecycle, such as model training sessions, data updates, and system performance metrics. When changes or incidents occur, you then have a clear record of what happened and why. Such living documentation not only facilitates internal reviews but can also provide evidence of compliance during external audits or regulatory inquiries.
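Automated logs are easiest to audit when each event is a structured record rather than free text. The sketch below uses Python's standard `logging` module with a small custom formatter that emits one JSON object per line. The `ai_lifecycle` logger name, the event names and the `details` field are assumptions for illustration.

```python
import io
import json
import logging
from datetime import datetime, timezone
from typing import TextIO


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        event = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "event": record.getMessage(),
        }
        # Structured fields are passed via logger.info(..., extra={"details": {...}})
        event.update(getattr(record, "details", {}))
        return json.dumps(event)


def make_lifecycle_logger(stream: TextIO) -> logging.Logger:
    """Return a logger that writes JSON events to `stream`."""
    logger = logging.getLogger("ai_lifecycle")
    logger.setLevel(logging.INFO)
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]  # replace any handlers from earlier calls
    logger.propagate = False     # keep events out of the root logger
    return logger


# In production the stream would be a file or log shipper; a buffer keeps the demo self-contained.
buffer = io.StringIO()
log = make_lifecycle_logger(buffer)
log.info("data_refreshed", extra={"details": {"dataset": "applications", "rows": 1200}})
log.info("training_completed", extra={"details": {"model": "credit-risk", "version": "1.4.0"}})
print(buffer.getvalue())
```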

LC-5 Change Management & Version Control

Finally, AI systems don’t stand still once they are put into production; they evolve through model retraining, data refreshes, and software updates. ISO 42001 (A.6.2.5) calls for robust change management processes to handle these updates, while ISO 27001 (A.12.2) outlines procedures for secure software development and configuration management. ISO 27701 (A.7.3.7, B.8.5.7, B.8.5.8) extends these principles to data privacy, ensuring that model changes do not inadvertently violate data protection requirements. The EU AI Act (Article 11.3) hints at similar expectations for high-risk systems, where any significant modification could alter compliance status. NIST AI RMF (Map 2.1) emphasises that each change should be mapped to a risk assessment, ensuring that new vulnerabilities or biases are not introduced. SOC2 (CC8.1, CC8.2) echoes these controls by focusing on rigorous version control and documented change approvals. In practice, this means maintaining a central repository for all AI assets (models, datasets, and software components) with version tags and detailed change logs. Before deploying an updated model, your teams need to test it thoroughly to validate its performance, mitigate risks, and update documentation accordingly.
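The central-repository idea can be illustrated with a hypothetical in-memory model registry. Each registered version carries a content hash, a rationale and an approver. Only versions that passed validation are eligible for deployment. A real setup would persist this in a proper model registry or version control system. The class and field names here are illustrative only.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ChangeEntry:
    version: str
    artefact_sha256: str  # ties the version tag to the exact model bytes
    rationale: str
    approved_by: str
    tests_passed: bool


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry; a real one would persist its history."""
    history: Dict[str, List[ChangeEntry]] = field(default_factory=dict)

    def register(self, name: str, version: str, artefact: bytes, rationale: str,
                 approved_by: str, tests_passed: bool) -> ChangeEntry:
        """Append a new version to the change log; version tags are immutable."""
        entries = self.history.setdefault(name, [])
        if any(entry.version == version for entry in entries):
            raise ValueError(f"{name} {version} is already registered")
        entry = ChangeEntry(version, hashlib.sha256(artefact).hexdigest(),
                            rationale, approved_by, tests_passed)
        entries.append(entry)
        return entry

    def deployable(self, name: str) -> ChangeEntry:
        """Latest version that passed validation; untested changes never ship."""
        for entry in reversed(self.history.get(name, [])):
            if entry.tests_passed:
                return entry
        raise LookupError(f"no validated version of {name}")


registry = ModelRegistry()
registry.register("credit-risk", "1.0.0", b"weights-v1", "initial release", "alice", True)
registry.register("credit-risk", "1.1.0", b"weights-v2", "retrained on refreshed data", "bob", False)
print(registry.deployable("credit-risk").version)  # prints 1.0.0
```

Here the retrained 1.1.0 stays in the change log but cannot be deployed until it passes validation. That keeps the record of what changed separate from the decision about what ships.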

Phew! We've now covered more than half of our journey through AI governance control domains. We've moved from the foundational elements of leadership and risk management through to the critical safeguards that ensure AI systems are developed responsibly and ethically. These are the bedrock of trustworthy AI, the guardrails that keep innovation on track while protecting people from harm.

But building is only half the story. Even your most carefully designed systems will encounter unexpected challenges once they collide with the real world. That's why my next article delves into what I think is probably the most crucial phase: operational management. We'll explore what it takes to maintain vigilance over AI systems in production. That includes the requirements for robust post-market monitoring and other forms of monitoring that can detect subtle signs of degradation before they become critical issues.

Then, we'll go into the wicked requirements of incident management: what happens when things don't just deviate from the plan, but go wrong in a big way. Effective AI governance isn't just about preventing problems; it's about having clear, tested procedures for responding when serious issues arise. That’s when you discover whether you really have the high-integrity AI governance practices you need. Those include resilient response mechanisms that help you identify, contain, and learn from AI incidents, turning potential crises into opportunities for system improvement.

Thank you for reading.

Full Control Map Download

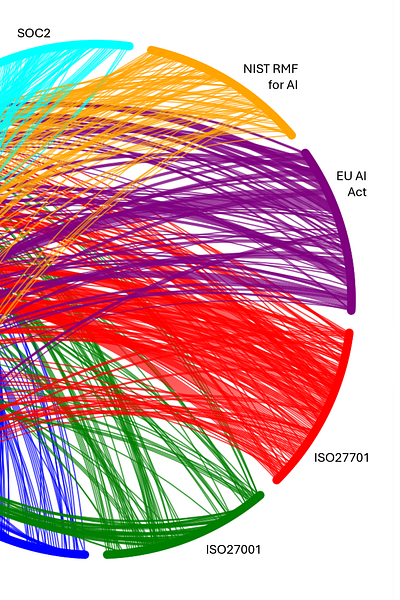

You can download the controls map as an Excel spreadsheet below. In the final article of this series, though, I’ll share a full open-source repository of charts, mappings in JSON and OSCAL format, and Python code. With these you can modify this spreadsheet to your own needs, expand the frameworks included, generate mappings for export, and create chord diagrams like the ones I’ve provided here. Please do subscribe so I can notify you of that release.

I’m making this resource available under the Creative Commons Attribution 4.0 International License (CC BY 4.0). You can copy and redistribute it in any medium or format, adapt and build upon it (even for commercial purposes), and use it as part of larger works, modifying it to suit your needs. I only ask that you attribute it to The Company Ethos, so others know where to get up-to-date versions and supporting resources.